Archive of posts from May 2008

-

Ethan's Name Plate

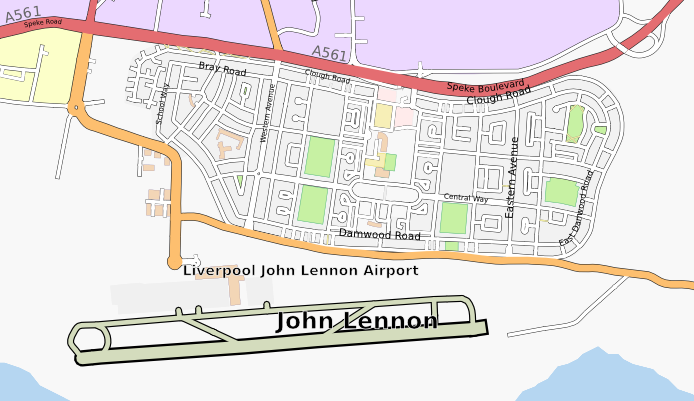

My daughter Evie was given a very nice thing soon after she was born - her name cut out of a piece of wood. My son Ethan however wasn’t bought such a thing and I decided that I should try to make something along the same lines. The idea developed into making a nameplate for his bedroom and after thinking about it for a little while I came up with an idea.

The laser cutter is still my favourite thing in the DoES Liverpool workshop, and the thing that I’m most capable of using, but it does have its limitations. It only produces flat things, it can only cut thicknesses of maybe 3-6mm, and it really produces things in only 1 or 2 colours (the colour of the material and whatever colour it turns out if you engrave).

I don’t entirely remember where the idea came from but I decided that I’d like to try layering multiple materials on top of each other to allow me to introduce more colours. I also came up with the idea of a forest scene. Part of the idea behind this was to give me the option of mixing wood and acrylic together in the piece. I decided on a night time scene as that feels like the most interesting time to be in a forest, with a full moon beaming down and some of the nocturnal creatures coming out.

I don’t really think of myself as a very artistic person but I did want to avoid simply pulling together clip art so I decided that I really had to create all the elements that I was going to use by myself. As I work most of the week and do childcare most of the other times I really just had short snippets of time to work on the project during the evenings. I find this can actually work quite well sometimes as it forces me to think about things more rather than just diving in and rushing things. Although it can be a little frustrating when a few days goes past and all you’ve done is draw a few branches!

For the night sky I decided to try a few things that might make it more interesting. Laminated acrylic is a thin plastic material that is made by layering two colours of material together. One of the layers is particularly thin and is ideal for engraving. Generally when you engrave acrylic on a laser the result is actually really subtle. Whereas with plywood where you go from a quite light brown to a much darker brown, acrylic doesn’t really change colour, rather it’s more the shadow caused by the indent that you see. With the laminated acrylic you can get a much more noticeable difference as the thinner layer gets engraved away showing a completely different colour underneath. Looking at the colours of laminated acrylic available there was a nice black on white option that would really work well for a night sky as I could engrave away the black to reveal stars and the moon.

Going for realism I also decided that I should really make my moon more than just a big white circle. Fortunately just a few weeks earlier there had been a super moon and lots of people, me included, had taken photos of it. I used one of the pictures I had taken and applied the “Posterise” filter in the Gnu Image Manipulation Program (GIMP) to reduce it to just a few colours. I then used Inkscape’s “Trace Bitmap” feature to convert this into vectors suitable for the laser cutter. Even with the laminated plastic you still only really get 2 colour options but I decided to try engraving the material twice, so that I engraved the black to reveal white underneath, and then on top of that white did some further engraving to add texture to my moon. The effect actually came out really well, I was very happy with the result.

NASA release most (all?) of their imagery under a public domain license so there’s a few more little items of interest that I added but will leave them to be found rather than describing them here.

The sky layer was quite simple to do structurally as it simply consisted of a rounded box with the moon and some stars engraved on top. When it came to the other layers I was going to have to start cutting elements out, allowing the layers below to show through, but I needed to do this while making sure the whole piece was structurally sound and that pieces wouldn’t either fall through following the laser cutting, or break off easily. Fortunately even in a forest you’re going to get some overlap so I just needed to make sure that my trees overlapped enough to touch the sides and each other, whilst leaving enough space to see the sky below. I was intending to build the trees up from two layers, one for the branches and another for the foliage but again I wanted to make sure I left enough gaps in the foliage so that you could actually see and appreciate the tree trunks I’d put so much time into designing. I did this by mixing some conifer trees with some deciduous which gave me good opportunities to show the trunks. I do like the idea of having hidden elements which, even though they won’t necessarily be seen, I have still put some care and attention into having them look at their best.

The foliage for a conifer tree is fairly simple to do, if you can imagine a child’s drawing of a Christmas tree you can imagine my artistic prowess! I wanted to add some texture to these too rather than having a plain green layer so I added some zig-zag shapes to further suggest foliage. My first attempt at doing this was to draw these shapes as lines which I cut on the laser at high speed and low power so that the line was simply engraved, but I found this gave a much narrower line than I wanted and didn’t look particularly good, so to get the best result I increased the stroke width of my lines in Inkscape then used the “Stroke to Path” menu option to turn this into a polygon that could be engraved properly. The foliage layer was again a little tricky as I had to make sure that the tree touched the frame enough that they wouldn’t break. I had to balance this with allowing enough space so that the layers below would show through. Fortunately in the final product this layer would be sandwiched by other layers and as this isn’t intended as a toy there shouldn’t be too much risk of breakage.

As I’ve said this method of working was limiting my colour options. I was intending to use plywood for the branches of the trees and green perspex for the foliage so I decided to add some woodland creatures to the tree branch layer so that I could reuse the brown for fur, as it happens they’re mostly blocked out by the other layers but there’s definitely a little crudely drawn squirrel peeping out.

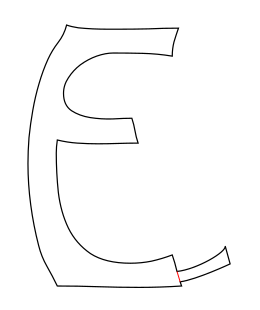

The final layer was of course the text layer. Again I decided to use plywood for this layer. I didn’t want to overcomplicate things and it felt like interchanging plywood and acrylic for every other layer would give a nice effect. It actually took me quite a long time to find just the right font. I had some idea in mind of swirly lettering but couldn’t quite work out what I wanted, I forget what search terms I was using but I think I was looking for something vaguely Celtic. In the end I found Ober Tuerkheim which gives a great effect. Again I had to work to make sure that the letters would be properly attached to the frame and wouldn’t be likely to break.

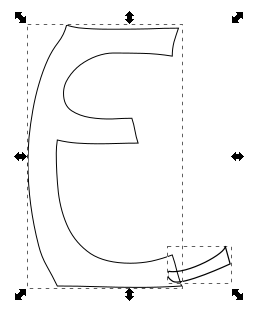

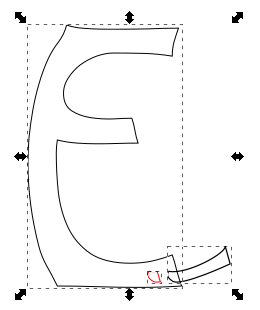

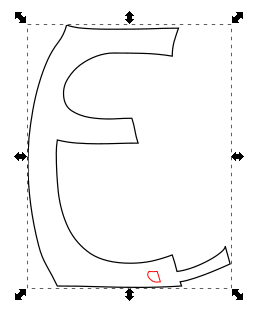

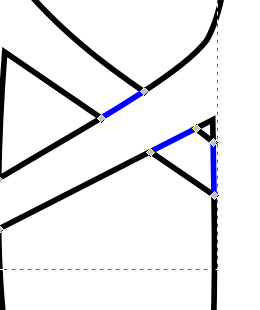

As you can see the first and last letters overlapped the frame, and the “h” was large enough to overlap the frame at the bottom, even breaking the fourth wall. To solve the issue of the other letters I ended up connecting them with small tabs. I can’t quite remember where I got the shapes for the tabs, quite possibly it was a hyphen or other shape from the font, I then moved it around and rotated it to look just right. I didn’t particularly want the tabs to be a feature of the design though so I ended up removing the ends of them. This was actually a fairly simple though time consuming process which I had used multiple times in the project to deal with overlaps, e.g the overlapping branches of the tree. Whenever I had an overlap I would want to only cut the outside of the shapes, and then etch a line to show the overlapping part. Here’s a breakdown of how this worked for the letters

- Letter and tab

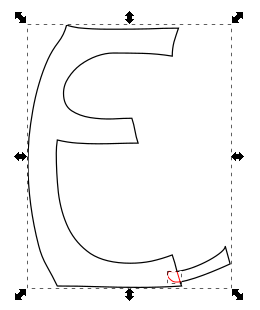

- Duplicate both paths and use the intersection tool (I’ve shifted it over and made it red so you can see it)

- Now union the original elements to get the cut path

- Moving the piece that we broke out using intersection into place

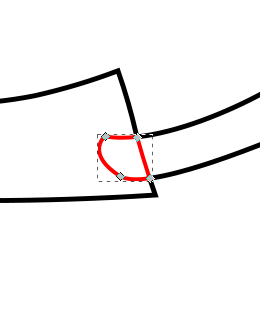

- Here you can see the nodes of the intersected piece

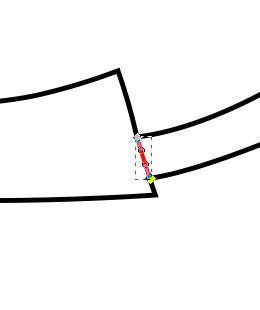

- In this case I don’t actually want the tab to be visible so I remove all segments apart from the one that made up the edge of the letter E

- And here is the finished product

Such a convoluted process really just to get that little line but it really does give a good effect when used throughout. I used a very similar process for overlapping branches as mentioned but here I would end up with little squares making up the intersected part and I’d need to remove opposite edges to show a single branch overlapping the other branch.

Anyway, enough about process and techniques, I’m sure you’ve been waiting to see the finished article, so here it is:

I stained the tree branch layer and used danish oil to finish the letters layer so even though they were both made with Birch ply I still got to differentiate their colours. All-in-all I was really happy with how it came out. Ethan seemed to like it too!

- Letter and tab

-

Year Notes: 2016

So 2016 started quite similarly to 2014 with a new baby in the house. I was fortunate enough that when agreeing to take the job at Axon Vibe I’d had the foresight to request that, in the event that we had another baby, I’d be able to take a 3 month extended parental leave (unpaid). I was so glad I’d done that as it meant I could be much more involved in Ethan’s early days and also give Evie some much needed attention too.

Although I’d been freelance when we’d had Evie, so in theory was more in control of my time, I was also more worried about what money was coming in. Although my extended leave was unpaid I did have the security of knowing I had a well-paid job to go back to. I’m definitely a supporter of extended parental leave, ideally paid, and was able to put this into practice when DoES Liverpool’s admin guy, Seán, had a child last year. We agreed to give him a month’s paid leave rather than the statutory minimum. Obviously as he’s a part-time worker the cost was less to DoES than it might have been for a full-time person, and a month is still a lot less than we would have to give for maternity leave but I did want to make sure we did more than the minimum and was glad that the other directors of DoES agreed.

Someone's chuffed to be a whole 2 months old! pic.twitter.com/MCmV3wEV5n

— John McKerrell (@mcknut) February 3, 2016Looking back it seems like DoES Liverpool’s search for a new home began in earnest around March 2016. We looked at a few places around Bold Street and the Ropewalks, found a reasonable candidate on Victoria Street but then found what we thought could be a great option near the Ship & Mitre pub off Dale Street. It was actually quite a large building, at 8000 square foot over 4 floors, but we were hoping that we might sublet out reasonably large portions of it to other businesses. We took the community on a series of visits and spent some time working out whether we would be able to afford it, but in the end the owners decided to rent it to a charity that they were involved with so it slipped out of our grasp. Following this we got a little jaded and as far as I can recall didn’t really do much more searching until 2017.

I took Evie to MakeFest in the Central Library in Liverpool in June. It was nice to have a day out just her and me and she seemed to find it interesting, though was a bit nervous of some of the costumes from the Comic Con attendees who were wandering around! Makefest is a great showcase of “making” in Liverpool and beyond and has lots of interesting workshops. They’re actually planning for this year’s event so if you’re interested in exhibiting you should definitely sign up. (ahaha! yeah I started writing this in January 2018 so we’ve had two Makefest’s since)

Supporting @lpoolgirlgeeks with Evie at @lpoolmakefest and #LivMF2016 pic.twitter.com/ktrDG3uE78

— John McKerrell (@mcknut) June 25, 2016Jumping to the end of the year we finally come across one of the projects that I wanted to blog about, that triggered me starting on the whole “Year Notes” process! I laser cut some Christmas presents for the kids. It was quite a labour of love taking quite a while to prepare for (given I could only really work on it for minutes each evening) but I was very pleased with how they turned out… for more detail on that see this follow up blog post.

You can tell this laser cutting is important as I've actually bothered with masking tape to reduce scorch marks #weeknotes pic.twitter.com/2FaWvI99e8

— John McKerrell (@mcknut) December 16, 2016 -

Year Notes: 2015

Last year’s notes ended with a cliff hanger, would I take a permanent job at Axon Vibe or would I not? Well the year began straight away with a visit to head office. Flying out to Lucerne in Switzerland on the fifth of January. This was actually my wife’s first day back in work after being off for over a year’s maternity leave. It was unfortunate to have to leave her to sort out our daughter during her first week but I did at least get a later flight so that I could help get Evie to her first morning in nursery.

After much consideration I ultimately decided to take the job at Axon Vibe. I was intending to continue working for them so it’s not like I was looking for other clients, and the money was such that I wasn’t losing out by being a salaried worker. By this point I’d already agreed that I would have my daughter on Friday afternoons so I ultimately decide to work Monday - Thursday but to keep Friday for myself. That would allow me to keep working on my own apps or even doing small amounts of client work on Friday mornings. The view from the terrace (see above) certainly didn’t hurt either!

I wasn’t going to mention too much about what we did at Axon Vibe that year as very little of what we did then has survived. We took some time to work out what our key offering would be, sometimes focussing on business to business applications, and at others trying to develop public facing apps. One interesting thing was that we took on a number of developers and a designer who all worked from DoES Liverpool. By the end of the year we actually had 4 people all working from Liverpool. That was great to see, even if I did find it a little strange sharing an office with colleagues! We also enjoyed a great week in October when we managed to persuade colleagues from Cirencester, Norwich and Edinburgh to visit while we worked on new developments.

Way back in 2011 I’d actually taken over the running of a monthly event that would have geeks meeting up in a local bar on the last Tuesday of the month either just for a social meet-up or to hear someone talking about some technology or, well, anything that happened to interest them. GeekUp was a venerable event that started way back in December 2005 in Manchester. Over time the event became more popular and drew in people from surrounding towns, Liverpool included. I attended my first event in March 2007, gave a talk on OpenStreetMap at the following event and then was glad to attend the inaugural event in Liverpool later that month.

Running for so many years, GeekUp was such a great way for similarly minded folks in Liverpool to connect. Most of my friendships in Liverpool can be traced back to GeekUp, especially if you consider that I would never have co-founded DoES Liverpool if I hadn’t met Adrian McEwen there. While I enjoyed attending GeekUp I found I wasn’t the best at running it. It was easier when we met in a bar as if there was no talk you could easily just chat and have a drink. It’s amazing how a simple schedule of “last Tuesday of the month” and perhaps the odd “Are you going to GeekUp?” on twitter would keep the event going quite well. GeekUp ran for four years meeting in 3345 (now “The Attic”) on Parr Street. On one of the last meetings there we found the room had been double booked (I’m sure with UKIP!) and it turned out we no longer had the booking at all. As we were in the process of setting up DoES Liverpool, and we were planning to host events there, we had a single meeting in Leaf on Bold Street, before moving the event to DoES.

This change really affected the dynamic of the event, it was more difficult to get into the space and when you arrived you found a bland room of desks so it was quite different to meeting in a bar. We actually managed to continue for four more years but I struggled to get around to finding people to talk. Then in 2014 when I did manage to arrange and promote in advance a great line-up of speakers, I struggled to attract an audience! In the end I decided that life was complicated enough without the monthly stress of finding a speaker so 2015 was the year I brought the whole thing to an end. Although I don’t really get time to go out so much these days it does seem like Liverpool is missing a general geeky social meet like this now so it’s a shame there is no GeekUp, but there’s nothing saying someone couldn’t take it on again in the future!

I continued with my running this year too. Living just 4km away from DoES Liverpool it’s actually an easy and fairly short run to get into the office and I’ve tried at times to make it my primary way of commuting. I haven’t managed it often but have a few times managed to run there and back for the four days I would be in the office. No Half Marathon this year but I signed up for the Spring 10K around Sefton Park and managed to beat my personal best of around 46 minutes, I wasn’t too confident as I hadn’t done much speed training but was very happy when I blew almost 3 minutes off my record!

Ok, last tweet on the subject, official results are in, 43:11 :-D http://t.co/Q9bULaos6D pic.twitter.com/o25JdXd2Yb

— John McKerrell (@mcknut) May 4, 2015I tried to take up gardening as a hobby to brighten the place up. I even planted potatoes so that we’d get greenery and useful, tasty potatoes. Though at times the potatoes seemed like they were trying to take over the DoES Liverpool meeting room we didn’t really have a very prosperous harvest and it felt like the time and effort could be better placed!

.@DoESLiverpool potato update: Growing pretty well! Should be harvesting in the next few weeks #weeknotes pic.twitter.com/p1A51gXB3e

— John McKerrell (@mcknut) June 1, 2015DoES Liverpool could still benefit from some more plant life but there definitely needs to be a plan for maintenance for this sort of thing! 🌻

Hm.. anything else happen in 2015? Well we made this little announcement:

Happy to announce that Evie is looking forward to being a big sister! All going well so far. #duedecember pic.twitter.com/iImTotOhFr

— John McKerrell (@mcknut) July 29, 2015As we settled into our new life with work and nursery and with Evie being such a good sleeper we decided that we might actually like to have another little person around the place. Funnily enough while Evie had actually come 10 days late Ethan actually came along exactly on his due date!

I won on the premium bonds today but I think yesterday's prize was better! #itsaboy pic.twitter.com/PXOmL0KpsH

— John McKerrell (@mcknut) December 4, 2015While Ethan seemed originally to be feeding okay we ended up having similar troubles with him losing too much weight and being harassed by mid-wives. After a week of problems we went along to an infant feeding clinic only to be told that Ethan had a tongue tie. A tiny piece of skin was stopping him from being able to move his tongue freely and causing him problems with feeding. Unfortunately our options were limited to waiting 2 months for an appointment in Alder Hey or trying to get it done in Chester Hospital. There was also the option of going private but we really didn’t think we should have to do that and also of the standards of care we’d receive. Being so close to Christmas we were quite concerned about whether we’d managed to get it done before the holidays so we were very happy when we got an appointment for the 23rd December. Poor Ethan ended up picking up a cold meaning the nurse almost couldn’t complete the tongue tie snip, and as it turned out had probably missed some as it really didn’t make much difference to his feeding. It seems ridiculous having to wait three weeks for something that should have been picked up and fixed while he was still in the hospital, and that the much vaunted and well-funded Alder Hey couldn’t do anything about it for months. Obviously we can’t be sure that dealing with it straight away would have reduced the problems, but it would have given Ethan a much better chance and given my poor stressed wife one less thing to worry about!

I feel I should finish on a lighter note though so let’s back-track to October, we’d been invited to a Halloween party and I couldn’t think of what to go as. My wife spotted a Jack Skellington costume in the shop and came up with the great idea of combining it with one of my old Santa Dash costumes, resulting in this great result:

Bit of recycling went on for my Halloween costume pic.twitter.com/bPsG6YcrY0

— John McKerrell (@mcknut) October 31, 2015 -

Year Notes: 2014

So obviously after the events at the tail end of 2013, this year was mostly spent dealing with the fact that we now had a tiny (not so tiny) baby to look after! Work-wise I’d stupidly taken on two new clients just before baby came along so while they were freelance clients who were aware of what was happening, it did mean I had some worries about making sure I could do work for them. In the end one of them tailed away to nothing within a few weeks of the new year and the other was just a week or so’s work that I managed to get in while baby was napping.

In retrospect the newborn stage is actually something of a calm before the storm as they do tend to sleep a lot. We had some issues around baby’s feeding and weight gain which the midwives dutifully freaked us out over but after a few months she did start putting on weight better. In fact once we weaned her onto food, which with perhaps rose-tinted hindsight went pretty well, she started putting on plenty of weight.

The “week’s work” was quite an interesting iPad app and ultimately developed into a continuous 2 days a week. Without going into too much detail it was tourism based and involved having the app open while you were driving a car. It proved quite tricky to develop and test due in part to literally having to go out and drive in a car to get any useful test data. Also my clients were in London and there was times when they would upgrade the app, drive to central London, try to launch the app and find it insta-crashed. The fix was to delete and reinstall, not so easy for a multiple hundred MB app when you’d already gone to the location. With this and another client I’ll get to shortly I learned a lot about the benefits of automated testing, automated smoke testing and continuous integration. The testing tools at the time were not so good as they are now in Xcode but if I had a good method to simulate a drive, and to do smoke tests every time I committed code I could have avoided many of the problems I encountered.

A few months into the year when I was mainly working on some updates to CamViewer and making further small changes to the iPad app I just mentioned I was approached by John Fagan who I used to work with at Multimap. He was wondering if I’d be interested in taking on a full time role at the company that he was working for - Axon Active. I’d not really been interested in full time roles but in fact as this was a foreign company I would be treated more like a contractor, and the money was pretty decent. Ultimately I told John no, only for him to suggest that I might work just a few days a week for them instead. When I mentioned these talks to the people I’d been engaging with on the other app they jumped at the chance to grab my other free days and so I ended up with 5 paid days a week split between Axon Active (3 days) and the other client.

Miserable weather, awesome tunes! - Just posted a 12.40 km run - #RunKeeper http://t.co/F0nJvhTmoD

— John McKerrell (@mcknut) February 12, 2014Looking back on my tweets from the year I see multiple mentions of running. I’ve never really mentioned running on this blog which is quite bad because it’s become a regular part of my life. In fact in 2013 I ran my first marathon, a milestone I forgot to mention in the previous blog post! In 2014 I ran the same race again but found it much more tricky to fit in training around childcare, I mostly did best efforts at the training plan I was using but ended up with a decent result. While in 2013 I managed an awesome 1:40:00 for the half, I managed to follow it up in 2014 with a very respectable 1:43:25. In 2013 I ended up having real issues with my IT band, causing me to limp the last few miles. Annoyingly in 2014 I actually felt much better and had no issues such as this, but then faced a headwind for the same last miles! I could quite possibly have improved my PR if it wasn’t for that.

With Axon Active I was working on a small project they’d been developing around taking in various items of data that would be made available on an iPhone, uploading them to a server and from there deriving information about patterns in the way you live and your future plans that we might be able to help you with. At the time we pulled in location and calendar events and would do things such as suggesting a place you might go to nearby for a quick lunch or let you know about travel options for your calendar appointments.

Axon Active are a Swiss company but most of the people working for them were remote. At the time we had people in France, Edinburgh, London, Brighton, Manchester, even Russia! This allowed me to keep the flexibility of working from DoES Liverpool which was very handy. We would meet every 3 weeks in London for sprint planning and every 3 months for a trip to head office in Switzerland. This worked really well with the new baby, I could choose to work from home or from DoES Liverpool most of the time and the trips to London weren’t too tricky. Having to be away for a week wasn’t so great but it also wasn’t so often. We had plenty of support from my mother in law, Anne, so that made sure I was mostly not leaving my wife home alone with the kid. Also helped that my daughter got really quite good at sleeping from as early as 6 months!

Enjoying the view at the Axon barbecue pic.twitter.com/ZgonV9aqKF

— John McKerrell (@mcknut) April 30, 2014The project at Axon Active was initially just a side-project for an 8 person team but as the year progressed the company really saw the potential of what we were doing and it culminated in a new UK based company being formed at the very end of the year… but that’s really a story for 2015.

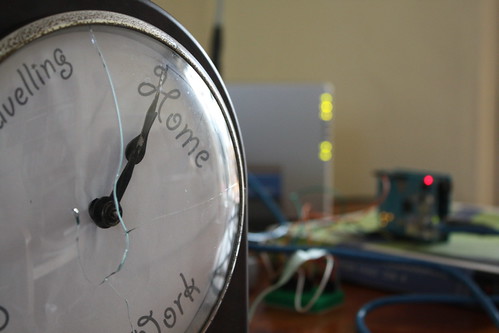

Again looking back at my tweets I see that after some discussions with Patrick Fenner I had him and his wife Jen Fenner, through their company Deferred Procrastination, help me with a new design of the WhereDial. They actually engaged an old friend Sophie Green to prepare some artwork for the device while Patrick and Jen looked at improving the functional design and the production method. They developed a way of using screen printing to allow much faster printing of designs onto the laser cut materials (ultimately screen printing would take seconds where laser engraving could take 15-20 minutes per piece). The resultant WhereDial looked really good and I was quite happy with the results. Unfortunately as I got busier with life and paid work I found I didn’t have the time to progress this so never got as far as selling the new versions. I’ve had them around my house and on my desk over the years and it’s interesting to note that while they do look good, the design is quite busy and the colours quite low contrast so it can be tricky to tell what’s happening from across a room. Something to keep in mind if I ever get around to developing the WhereDial again!

What do you think of the new look WhereDial? See it up close and get kits at #MakerFaireUK @DoESLiverpool stand! pic.twitter.com/SMaeAIll22

— WhereDial (@WhereDial) April 26, 2014The iPad app continued on for much of the year, we found it tricky getting the location based stuff working just how we wanted. We were trying to simulate something that a human would do and the clients had a particular level of quality in mind that was hard to replicate. There was also the need for a sat-nav component in the app, we didn’t want to call out to Apple Maps so would have needed to either build a sat nav ourselves or pull in a third party component. In the end this proved particularly difficult to find for iOS leading them to look at Android as an alternative. With my lack of interest in Android and continuing focus on the Axon Active role we ended up parting ways in Autumn. It was a really interesting app to work on but just had some difficulties that would really have required a lot more development resource than me working on my own. I didn’t hear too much from the clients once I handed the code over to their Android developer and I have a feeling that the project stalled around then.

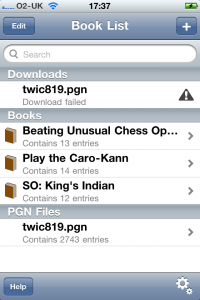

That’s probably it for 2014 really, once that app project stopped I found it useful having two days a week to do more work on CamViewer and also found the time to make some changes to the Chess Viewer app that I’d been working on intermittently. As 2014 drew to a close my wife started to think about returning to work and deciding what hours she wanted to do. Axon Active were also looking to setup the UK company and were offering people full time jobs, me included. I really wasn’t sure that I wanted to make that commitment, my stance hadn’t really changed from the beginning of the year, but I wasn’t really looking for any more work and the money was still pretty decent even as an employee so it was a hard decision to make. I didn’t make the decision until the first week of 2015 so I’ll make you wait to hear!

(Let’s finish up with a photo of me ready for the 5k Santa Dash)

Santa and his slightly concerned reindeer. pic.twitter.com/FanlyQmHSv

— John McKerrell (@mcknut) December 7, 2014 -

Year Notes: 2013

I was tempted to skip this year as I had after all written a blog post that year already, but why not go crazy and write a second one, maybe the 2013 review would have been the first post of 2014 anyway?

Although I tend to write about geeky work related things on this blog it’s really my personal blog so I should definitely mention that the two major events of 2013 for me were personal rather than work related. We (my wife and I) moved house, and got pregnant! The house move wasn’t particularly planned and just came from noticing a house around the corner from ours that looked interesting, checking it out (awful) but then looking at a few more and deciding that actually we really could manage a nice upgrade, and might need to as we’d be needing more room soon.

We were lucky that we could afford a lovely big victorian house, which looks nice and provides lots of room for kids and associated “stuff”, but isn’t the best when it comes to heating and having lots of little jobs that need doing. We actually didn’t move in for 3 months after buying it, but still even now have lots of jobs that need doing and some quite large bits of building work we’d like to do, if we could get around to it (making the cellar a usable space and extending the kitchen). Obviously having lots of jobs to do is standard for owning a house but this place definitely seems to take it to another level!

Also back in 2013 I took on a summer student, Elliot. That was a great experience even if I hadn’t necessarily prepared well enough for having him around. I ended up giving him all sorts of different bits of work to do including upgrades to my CamViewer webcam viewing app and upgrades to the WhereDial. He actually did most of the work towards a Wi-Fi enabled webcam that would have used TP-Link mini Wi-Fi routers as much of the brains with a bespoke Arduino compatible circuit board controlling the motor. Unfortunately in the end I didn’t get around to productising that but hopefully he had some fun working on it and got some good experience. After that summer he went back to university to finish his degree then had no problem finding a job. I haven’t caught up with him in a while but I believe he’s still doing well and applying his great versatility to working on a variety of things from back-end server coding to mobile app dev.

During the summer of 2013 I, with Adrian McEwen, Hakim Cassimally and Aine McGuire exhibited at Internet World trade show in Earls Court, London. That was quite an interesting experience. We were given a prime spot at the entrance to the show and used it to demonstrate a variety of IoT devices including the WhereDial and Bubblino, Hakim and Adrian also promoted their book Designing the Internet of Things. And because spending a week in London wasn’t enough we then spent the following weekend in Newcastle for Makerfaire!

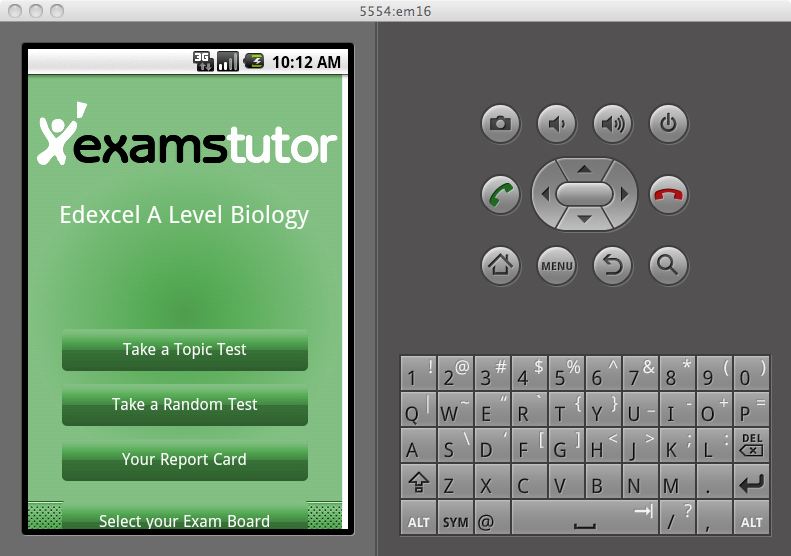

Wow, looking at my notes that was when my relationship with ExamsTutor ended. Unfortunately it didn’t end as well as I’d have liked, they simply decided they did not want to continue the relationship and largely cut off communication. I had owned the IP behind the apps so no further development occurred on those and it looks like they got removed from the app store in Apple’s great cull of 2016 (removing any app that hadn’t been updated in years). A shame to end that way as I’d enjoyed working with them but there wasn’t much I could do once they stopped replying to emails.

My relationship with 7digital also ended this year, as I recall they were looking to take development in-house which was fair enough. I don’t think iOS was ever really a huge priority for them as Apple’s app store rules made it difficult for them to make any money from the app. I know they continued using my codebase for a few years after, it’s hard to tell if they still do, the app’s structure hasn’t changed too much but it’s quite likely it’s had some restructuring under the hood.

As mentioned I (and Elliot) also continued working on CamViewer through that year. Interestingly looking at the Changelog that year seems to have been the start of me adding more functionality to the app. At the end of the year I added support for “HD” cameras that use RTSP by integrating a paid-for library. That was just in time for me to use cameras such as this as baby monitors, something I and my wife have both found really handy over the years.

Pretty much the last thing that happened in this year, Evie was born!

So that’s a review of 2013 done, just 3 more years to cover (assuming I get this done quickly, considering I started this blog post in January (2017) that may not be the case!)

-

New New Blog, New Old Blog

So I’ve decided to replace my previous blog with a WordPress blog.

That was the first line I put on my WordPress blog, which I’ve now replaced with Jekyll. The first post on that was in October 2006, so nearly 11 years ago!

Over the past 11 years I’ve moved away from doing anything PHP, or anything server-side really. I have no particular need of the online editing that you get with WordPress or any of the other features really. I’m also trying to be quite paranoid about what daemons I run on my hosting server. I came across Jekyll (again) as part of a documentation project in my day job and was impressed by how easy it was to use so decided I would start migrating some of my own stuff over.

My first migration was actually the website for my CamViewer iOS app which was already based on Gollum the markdown wiki so should have been quite simple to migrate (actually it was still a bit of a faff due to different markdown versions).

I hoped migrating Wordpress would also be easy as so many people use both technologies. As it turned out there was still plenty of work involved. I ended up using two migration tools. The main Jekyll importer didn’t seem to do a great job of pulling the HTML in but pulled all the comments across nicely so I ended up using exitwp and writing a yaml copying tool to pull the comments from one to the other.

This blog hasn’t actually got any comments functionality at the moment, I figure people can ping me @mcknut on Twitter if they want to make comments but I’ve copied the ones that were on the old site.

My previous blog made use of whizzy fun modern technologies to allow me to host my entire site on Google Base, Google Pages and del.icio.us. Unfortunately, because it was something I had just knocked up there was no comment support, and of course it did require Java and JavaScript to be enabled in the browser.

That original blog was quite a weird thing, I tried to make it so that all the content was hosted online. Unfortunately as it was JavaScript based not much has been saved on the Internet Archive. It does seem like all the original posts are now lost but with any luck I’ve got the content… somewhere.

-

Long time no see

It’s been a very long time since I’ve written anything here. I thought I’d like to write up a small project I did recently, but then there would be a bit of back story, and then a bit more, and oh yes I haven’t blogged in 4 years so I should really do something about that.

As such I’m going to try to write some year reviews. Not promising to make them in depth but it’ll give me a chance to look back and see what I’ve actually been doing all these years (actually it’s pretty obvious to me given that the last post was 4 years ago and a major thing happened just after that but here’s goes nothing..!)

-

Adding a Wiki to MapMe.At using Gollum

I recently added a wiki on my MapMe.At site and found it quite tricky to get working and difficult to find just the right information I needed so I thought I’d write it up.

MapMe.At is still on Rails 2 which seemed to mean I couldn’t install Gollum as part of the site.

I created a separate Rails 3 project that runs alongside MapMe.At and simply hosts Gollum, using instructions from here: http://stackoverflow.com/questions/13053704/how-to-properly-mount-githubs-gollum-wiki-inside-a-rails-app

I wanted it to use the user information in MapMe.At’s session hash so switched MapMe.At to use activerecord based sessions and used information on here to make the rails 2 session load in rails 3: http://www.kadrmasconcepts.com/blog/2012/07/19/sharing-rails-sessions-with-php-coldfusion-and-more/

I’m not actually using the rails2 session as the main session, I just load the information in. I have the following in

config/initializers/session_store.rbmodule ActionController module Flash class FlashHash < Hash def method_missing(m, *a, &b;) end end end end MapmeAtWiki::Application.config.session_store :cookie_store, key: '_mapme_at_wiki_session'Then in routes.rb:

class App < Precious::App before { authenticate } helpers do def authenticate oldsess = ActiveRecord::SessionStore::Session.find_by_session_id(request.cookies["_myapp_session"]) if oldsess and oldsess.data and oldsess.data[:user_id] u = User.find(oldsess.data[:user_id]) email = "#{u.username}@gitusers.mckerrell.net" session["gollum.author"] = { :name => u.full_name, :email => email } else response["Location"] = "http://mapme.at/me/login?postlogin=/wiki/" throw(:halt, [302, "Found"]) end end end end MapmeAtWiki::Application.routes.draw do # The priority is based upon order of creation: # first created -> highest priority. App.set(:gollum_path, Rails.root.join('db/wiki.git').to_s) App.set(:default_markup, :markdown) # set your favorite markup language App.set(:wiki_options, {:universal_toc => false}) mount App, at: 'wiki' endI wanted a wiki on the site to allow my users to help out with documenting the site. Adding their own thoughts and experiences and perhaps fixing typos I might make. I’m not sure that’s really started happening yet but at least I have a nice interface for writing the documentation myself!

-

WhereDial Ready to Ship

A good few years ago I blogged about making a clock that showed location, similar to the clock that Weasley family had in the Harry Potter books. Well now you can buy one! I’ve spent the last year working on the design and getting the hardware ready. Take a look at the photos below and head over to the website for more information on the WhereDial!

-

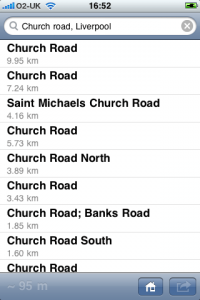

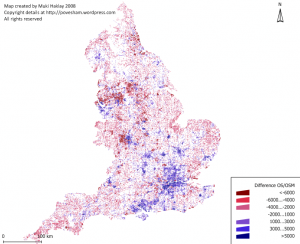

OpenStreetMap at Social Media Cafe Liverpool

I gave a talk last night at Social Media Cafe about OpenStreetMap. I actually haven’t been too involved in the OSM community of late so it was nice to get back into it a little bit. It was also good to find that a large portion of the audience was not already aware of OSM so it was nice to introduce it to people.

You can find the video of Social Media Cafe on USTREAM. The video will be chopped up soon at which point I’ll link to or embed my own talk here too.

I ended up with 63 slides taking up about 100MB so I’m going to try not uploading it to Slideshare this time, instead I’m going to summarise the talk here.

Why do we need OpenStreetMap?

-

Geodata historically isn’t

-

Current - things change so often maps quickly become outdated.

-

Open - if you know the map is wrong, wouldn’t it be simpler to let you update it yourself?

-

Free - You want me to pay how much for Ordnance Survey data?? Especially an issue when you’ve helped build the map.

-

-

Wiki is obvious next step

-

It’s just fun

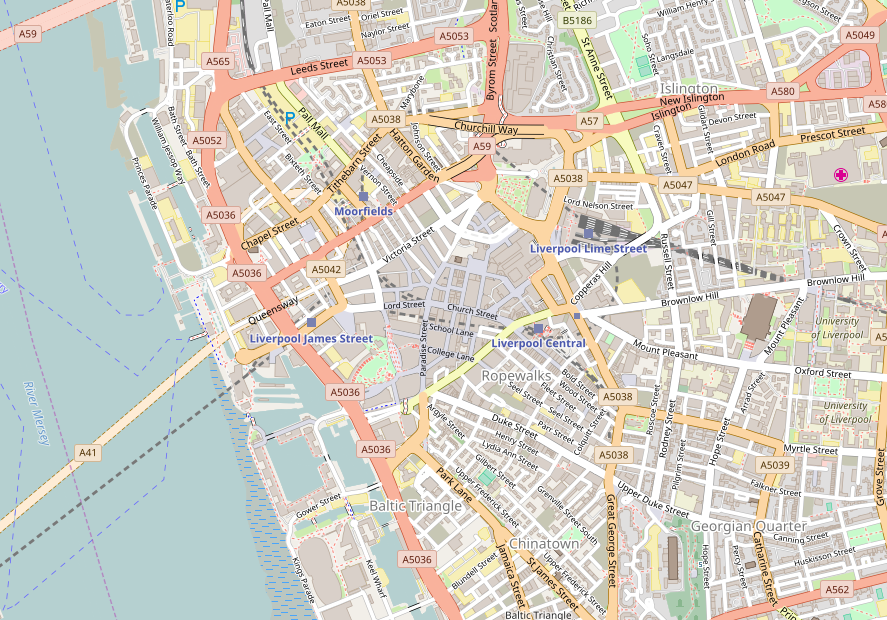

We make beautiful maps…

…which we give away

It’s not just Liverpool, or even the UK, in the talk I showed maps of the Hague, Washington, DC and Berlin. You can pan and zoom the map linked to above to browse the coverage.

Some Quotes

“It’s absolutely possible for a bunch of smart guys with the technology we have today to capture street networks, and potentially the major roads of the UK and minor roads”

Ed Parsons, ex-CTO Ordnance Survey currently Geospatial Technologist for Google

“If you don’t make [lower-resolution mapping data] publicly available, there will be people with their cars and GPS devices, driving around with their laptops .. They will be cataloguing every lane, and enjoying it, driving 4×4s behind your farm at the dead of night. There will, if necessary, be a grass-roots remapping.”

Tim Berners-Lee

“You could have a community capability where you took the GPS data of people driving around and started to see, oh, there’s a new road that we don’t have, a new route .. And so that data eventually should just come from the community with the right software infrastructure.”

Bill Gates

Some big names in technology who clearly think user-generated mapping data is a good idea.

Isn’t Google Free?

A lot of people ask the question “Why do we need OpenStreetMap when Google Maps is free?”

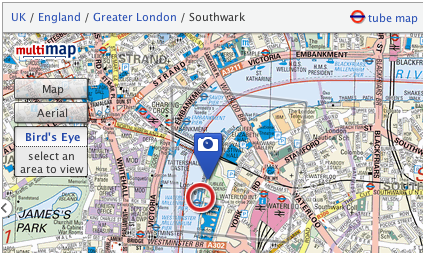

Current?

http://maps.google.co.uk/?ll=53.40407,-2.985835&spn;=0.010937,0.031693&t;=m&z;=16

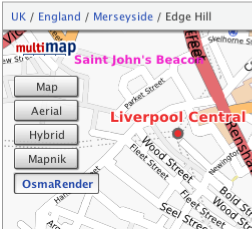

This picture shows a Google Map screenshot that I took on 16th February 2012. In the centre of the map you can see the Moat House Hotel. This was bulldozed in 2005 but still shows up on Google’s map. You’ll also see the Consulate of the United States in Liverpool. This was also closed down some time ago. So you can see that Google Maps isn’t perfectly current (and, for the record, I have now reported these problems to Google).

Open?

Google have launched their own project to map the planet. Map Maker allows people in many countries to edit the data of the map, adding roads and POIs in a similar way to OSM. Unfortunately Google doesn’t then provide full access to this data back to the people who have made it! Map tiles are generated and shapes of the data entered can be retrieved but the full detail of the data is kept by Google. The license offered by Google also restricts its use to non-commercial usage, stopping people who have put effort into creating the data from being able to derive an income from it.

Free?

Though Google’s mapping API is free to use initially they have recently introduced usage limits. Though they claim that this will only affect 0.35% of their customers, it has already affected a number of popular websites that simply can’t afford to pay what Google is requesting. Some examples will be given of these later.

Google Support OSM

It would be unfair to talk about the bad parts of Google without mentioning the good. Google has regularly supported OSM through donations, sponsorship of mapping parties and support through their “Summer of Code” programme.

As do other providers

It also wouldn’t be fair to paint Google as the only supporter, for example:

-

Mapquest sponsors and supports OSM efforts.

-

Microsoft Bing Maps sponsors and supports OSM efforts, even allowing their aerial imagery to be traced.

Workshops

Or, Map as Party (Mapping Parties!)

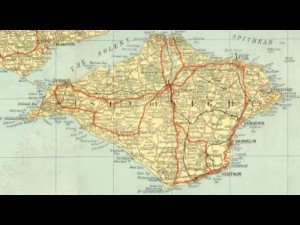

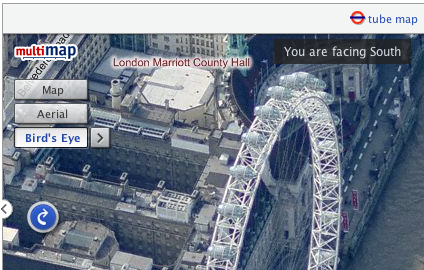

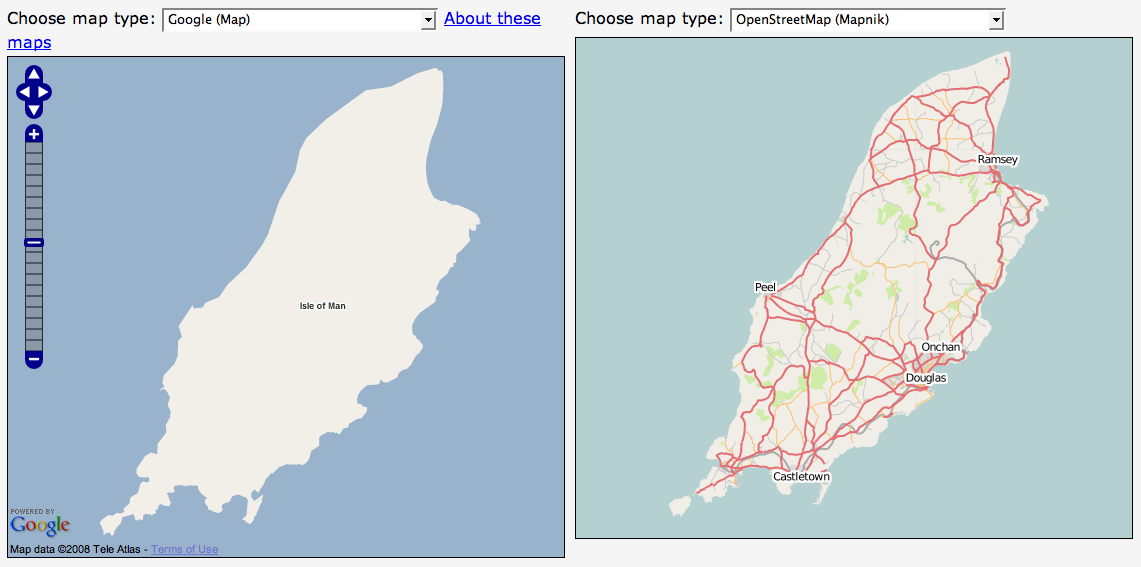

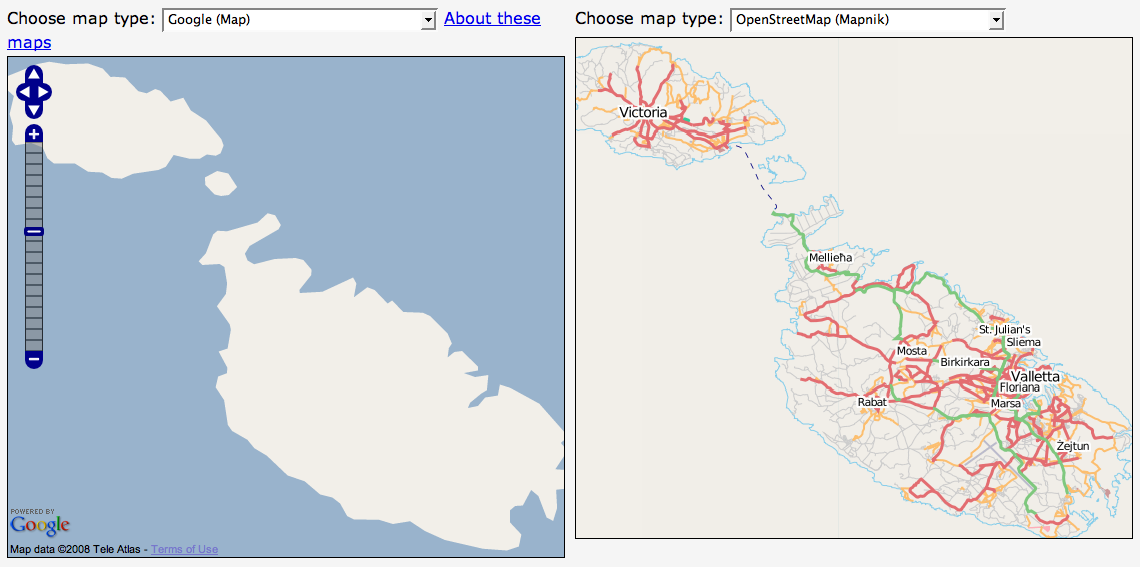

The first mapping party was in the Isle of Wight. At the time the only “free” map data available was an Ordnance Survey map that had gone out of copyright:

A group of people went to the island for a weekend and collected GPS traces of all the roads:

And from these made a great looking map:

We also held a mapping party in Liverpool in November 2007 which allowed us to essentially complete the map of the centre of Liverpool.

That video shows the traces of everyone involved with the mapping party as they went around Liverpool and mapped the streets. It was built using the scripts referenced on this wiki page

Editing OSM

Visit openstreetmap.org and sign up for an account. If you have GPS traces, upload them, don’t worry if you don’t as you’ll be able to help by editing existing data or tracing over aerial imagery.

Data Model

-

Nodes

- Single point on the earth - Latitude and Longitude

-

Way

- Ordered list of nodes which together make up a long line or an enclosed area

-

Relation

- A method of relating multiple ways and nodes together, e.g. “turning from way A to way B using node C is not allowed”

-

Tags

-

Nodes/Ways/Relations can have key=value pairs attached to describe their properties.

-

Example node tags:

-

amenity=place_of_worship, religion=buddhist

-

amenity=post_box

-

-

Example way tags:

-

highway=primary

-

oneway=yes

-

-

An online flash editor is available (Potlatch) simply by clicking the “Edit” link when looking at any map on OSM. An offline editing desktop app built in Java is also available, JOSM

There are hundreds of tags that you can use to describe almost any data, use the wiki to find more information especially the Map Features page.

License

CC-BY-SA

This license lets anyone use the OSM maps for free so long as you mention that the source was OpenStreetMap and you share what you produce under a similar license.

Very soon the license will change from CC-BY-SA to Open Database License which offers similar freedoms with more suitable legal terminology. Do read into it if you think it will affect you.

OSM in Action

Nestoria, a popular property website, has long supported OSM. A few years ago they made use of OSM data by using the maps generated from the Isle of Wight mapping party to replace the non-existent data in Google Maps. More recently they have been affected by Google’s plans to charge for its map data and so they have switched fully to OpenStreetMap data and maps.

CycleStreets is a great website for finding cycle routes. They offer a directions engine that gives detailed descriptions of routes, allowing you to pick between Balanced, Fastest and Shortest routes. They also offer lots more information and a database of photos to give more insight into a journey. The routes they recommend are ideal for keeping cyclists off the busy dangerous roads and onto the quieter safer more direct routes.

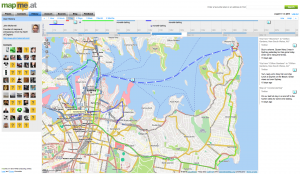

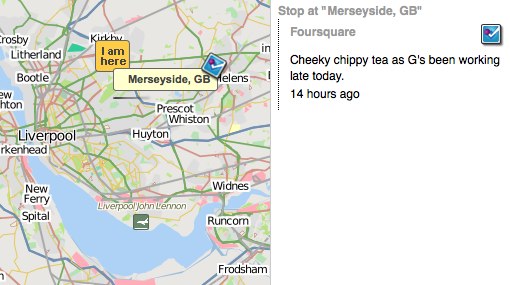

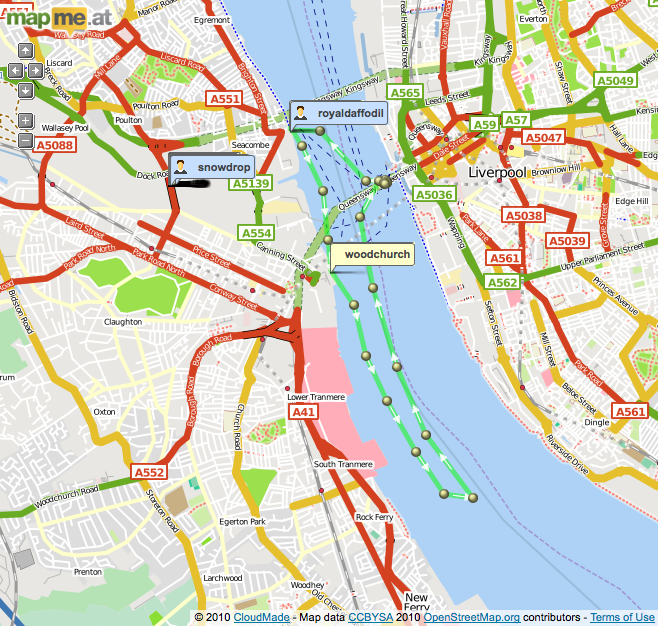

mapme.at is a website that I have built for tracking people’s location. People use it to track places that they visit and journeys that they take. I use it to track everywhere I ever go. Adrian McEwen wrote a script that puts the location of the Mersey Ferries into mapme.at and that’s what you can see in the map above.

A few years ago I worked with ITO World to create some animations of my data. They created great animations which you can find on my vimeo account but below is one showing every journey I took in January 2010 with each day being played concurrently.

All travels in January 2010 run at once. from John McKerrell on Vimeo.

Geocaching is a popular pastime based around GPSes, treasure hunting and maps. Their website used Google Maps and they also had issues when Google started to charge. As a result they have switched to OpenStreetMap too.

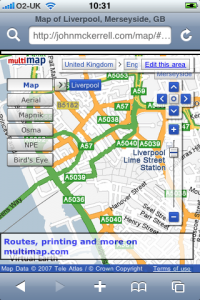

Mobile

Lots of mobile apps are available to let you use and contribute to OpenStreetMap

Android

Not being a regular user of Android I can’t recommend any apps personally but there is a large list of OSM Android apps on the wiki and I’ve selected the following based on features they claim to offer.

gopens and MapDroyd both allow you to browse OpenStreetMap maps on the go and claim to offer offline support, allowing you to view maps even when you’re not connected to the internet.

Skobbler Navigation provides a full Tom-Tom style satnav for navigating on the go, all based on OpenStreetMap data.

Mapzen POI Collector is a handy way to collect POI data while out and about, or to edit existing data.

iPhone

Skobbler Navigation is also available for iPhone, again providing a full Tom-Tom style satnav for navigating on the go, all based on OpenStreetMap data.

NavFree is another full satnav app based on OpenStreetMap data.

Offmaps is an OSM map viewer that allows you to download large chunks of map tiles in advance so that you have them, for instance, when you go on holiday. I would recommend the original Offmaps over Offmaps2 as I believe the latter restricts the data you can access.

Mapzen POI Collector again is available for iPhone and is a handy way to collect POI data while out and about, or to edit existing data.

Humanitarian

OpenStreetMap has been heavily involved in Humanitarian efforts, these have resulted in the formation of HOT - the Humanitarian OpenStreetMap Team. Projects have included mapping the Gaza Strip and Map Kibera a project to map the Kibera slum in Nairobi, Kenya. These projects have many benefits to the communities involved. Simply having map data helps the visibilities of important landmarks: water stations, Internet cafes, etc. Teaching the locals how to create the maps teaches valuable technical skills. Some people build on the data to provide commercial services to their neighbours, building businesses to support themselves and their families.

A hugely influential demonstration of the impact of OpenStreetMap involvement in humanitarian efforts occurred after the massive earthquake that struck Haiti in 2010. Very shortly after the earthquake hit, the OSM community realised that the lack of geodata in what was essentially a third world country, would cause massive problems with aid workers going in to help after the earthquake. The community responded by tracing the aerial imagery that was already available to start to improve the data and later efforts included getting newer imagery, getting Haitian ex-pats to help with naming features and working with the aid agencies to add their data to the map. You can see some of the effects of these efforts from the video below that shows the edits that occurred in Haiti around the time of the earthquake.

OpenStreetMap - Project Haiti from ItoWorld on Vimeo.

Switch2OSM

If all of this has piqued your interest then visit openstreetmap.org to take a look at the map, sign up and get involved in editing. Find more information on the wiki at wiki.openstreetmap.org or find out how you can switch your website to OpenStreetMap at Switch2OSM.org

-

-

YAHMS: Revisited, Upgrading to XBee with Wire Antenna

A good few months ago I blogged about my YAHMS project and my YAHMS Base Station. It was a funny time to get the project complete, just in time for the summer, but it has ended up being useful. Being able to turn the hall light on when we come home in the dark is really useful and, with the summer we’ve had, the heating has gone on from time to time too.

It hasn’t been without its problems, though fortunately they have been relatively few. Power ended up being an issue for the temperature probes, using four AA batteries would keep the probes running for about a week. This isn’t bad but would mean replacing them regularly, also when the voltage went low, the voltage detector didn’t seem to work and I would see rising temperatures. In the end I decided to use some wall wart power supplies, unfortunately that means the probes are less portable and also means that half of the circuit was unnecessary, as the wall warts never provide a low voltage.

I’ve also had problems with the Base Station falling off the network after around 5-7 days of use. There was originally a problem with the DHCP lease expiring so I made some changes to make it re-request the lease when it was near to expiring. This didn’t entirely fix the problem so to be sure I switched to a fixed IP. This led to the 5-7 day uptime, I’m not really sure what is happening here, I’m guessing some memory usage problem whereby there are not enough resources left to create a new Client object but I haven’t been able to track it down. When the problem occurs the system still manages to turn the heating on and off at the right times so it isn’t entirely useless, but it does become unresponsive to new settings and won’t send back data either.

Final problem, and the one I’m going to fix today, is that the XBee modules sometimes have issues transmitting data to the base station. I originally wanted to have one upstairs but couldn’t get that to work at all. I ended up putting that one in the conservatory to get “outside” temperatures but it still has issues quite often and has to be positioned right by the door to even sometimes work.

A few months ago I was approached by someone from Farnell who wanted to know if I would like to receive hardware to review on my blog. The arrangement is that they will send me hardware in exchange for a review on my blog and a link to the product on their website. So, here goes…

XBee Wire Antenna Module (Series 1)

Previously I’ve used the chip antenna versions of the XBee series one modules. These were great and really simple to work with but when I tried to use them in a network around my house I had real issues trying to get the signal to pass through walls and ceilings/floors. The best solution would likely be to replace all of the XBee modules with alternatives with better antennas or with more powerful radios. To replace all of the modules would be quite expensive so I’ve decided to go with replacing just the module on the base station and hope that it does a better job of receiving the signals sent out by the other modules. This may be foolish but I’ll give it a go and see what happens.

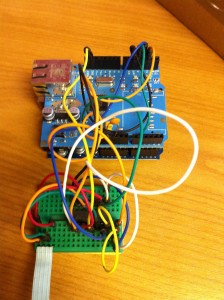

To replace the module should be a pretty simple job, it’s the same format as the chip antenna module so physically I just have to switch it out for the new one. Before I can do that though requires that I send some settings to the XBee to configure its ID and PAN ID as mentioned in my post about the temperature probes. To communicate with the Xbee I’m just going to use an Arduino Uno and wire the Din/Dout pins on the XBee to the RX/TX pins on the Arduino. I then load a blank sketch onto the Arduino and use the Serial Monitor to communicate with the XBee. What I’ll be sending to it is:

ATMY=0,ID=1234Which broken down means:

-

AT _ - Attention!_

-

MY=0, _ - the ID of this unit_

-

ID=1234, _ - the PAN ID for the network of XBees_

To send those settings open your Arduino software, choose the right serial port in Tools -> Serial Port then open the serial monitor with Tools -> Serial Monitor. Choose to send no line endings from the drop down at the bottom and send

+++then as quick as you can manage change to Carriage Return in the drop down first and send the above AT command. After the+++you should seeOKand after the AT command you should seeOKOK.You must also send an

ATWRcommand too to write these settings to the flash memory.Once I did that I started seeing random bits of binary data appearing in the Serial Monitor. Fortunately this was a good thing, it was the temperature data from my probes starting to show up!

I popped the newly configured module into my YAHMs hardware and waited to see if it worked…

As mentioned it was already receiving data so I started to see temperatures show up straight away. The conservatory probe was working straight away which seemed positive as it had issues before. When I took it upstairs it didn’t seem to work at first so I tried angling the box so that the chip antenna would be “pointing” at the base station. This seemed to do the trick and I started collecting temperature readings. Unfortunately as time has gone by I’ve found that it works less and less. I’ve now moved it closer to the base station which got me a few more readings but again it has stopped working. It looks like I’m gonna need a bigger

boataerial!Hopefully I’m going to be able to try out an XBee with an external aerial which should work well on the base station, by this point I’ll have a few spare XBees, including one with a wire aerial so I should be able to get a much bigger range of readings.

-

-

Reversing the Brain Drain

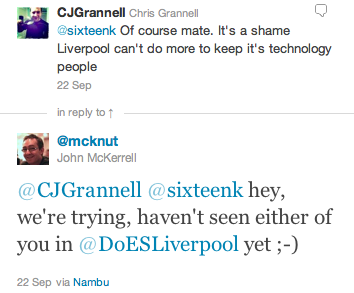

An unfortunate tweet from a friend yesterday suggesting that he might have to leave Liverpool to get a job, led to a little exchange:

That got me to a realisation:

Now that comment is certainly meant to be taken as tongue in cheek, but there’s some very real truth in it. To start with the more tenuous examples. Paul Freeman (@OddEssay) contracts for a company in Eccles, near Manchester. Since setting up DoES Liverpool Paul has been able to spend more time in Liverpool. Yes he’s still working for a company in Eccles but he’s now buying lunch from Liverpool companies and engaging with the Liverpool tech community more regularly.

Let’s move onto Paul Kinlan (@Paul_Kinlan). Paul is a Developer Advocate with Google. He’s based in their London office but also spends a lot of his time travelling around Europe and the world promoting Google Chrome and HTML5. His main reason for being here is to work with developers who are using Google products in the north of England, but by supporting DoES Liverpool and taking a desk with us Paul is now able to spend more time in Liverpool meaning he gets to spend more time with his family who are still based up here.

Finally we have Andy Hughes (@andyhughes86) and Andy Powell (@p0welly). They work for a company in Manchester who had offices in Stockport. Andy and Andy are both developers who worked in an office full of sales people. These were people who spent most of their time on the phone trying to make sales. Not the best environment for a pair of developers who need to get their head down and concentrate. Their company was moving into an office in Central Manchester, a smaller office in a trendier area which was going to mean Andy and Andy would either be in closer quarters with their noisier colleagues, or have to find somewhere else to work. Fortunately they came across DoES Liverpool and came in for their free hot desk day (bringing donuts!) They liked what they saw and took two permanent desks with us. Andy H lives in Runcorn, Andy P lives in New Brighton. Andy H unfortunately still has a long bus ride (but we’ll come back to that) while Andy P now has a 20 minute commute! As it turns out, Andy H is now moving to Liverpool. I had a chat with him recently and asked if he was planning to move to Liverpool before they joined us at DoES Liverpool. He told me that while he really wanted to move here he had resigned himself to living in Manchester. So not only do we have two people who are regularly coming to Liverpool and supporting businesses in the city centre, we’ve also got someone who would have had to live elsewhere being able to live in Liverpool as he’d hoped, paying taxes and again supporting local businesses.

So this blog post certainly isn’t meant to suggest that other places are bad. Generally at the moment though if you want to work for a digital company in Liverpool your choices are to join a digital agency or go it alone. If you don’t want to do this you’ll probably need to work elsewhere. I also recognise that in all of the examples given the person is still working for a company based outside Liverpool. It’s nice though to recognise that even at this early stage DoES is supporting local people and even changing their lives for the better. Hopefully in the future as people start building new businesses based out of DoES those businesses will expand and will start recruiting more and more people from the city who will no longer have to leave just to find a good job.

We also got some more news coverage recently, on Friday getting in the Daily Post for hosting the OpenLabs Developer Breakfast events, and an article 2 weeks ago that we only heard about because someone came into DoES for a look around, as a result of seeing it in the Metro. As it turns out that one was syndicated so we were mentioned all over the place!

-

Another Catch Up, Week 157

A month since my last update, I felt I had to post an update today though as it is in fact the third anniversary of the formation of my company, MKE Computing Ltd!

I’m intending to post a bit of a retrospective sometime over the weekend but thought I’d better update on the general stuff first.

Oh, one other housekeeping note, it seems that for the last few months of updates I’ve managed to mess up the week notes numbers, I first randomly added 3 weeks and then accidentally added 10! This week’s update number was actually lower than the previous one a month ago. I’ve gone through the past updates and updated them to make sure they’re right.

The biggest thing I’ve been “working on” recently is DoES Liverpool. In my last blog post I mentioned that we’d been finalising details of the bank account and the venue. We moved into our space shortly after, spent an intensive week decorating, cleaning up and laying out the space and opened to the public on the 18th July! We’re really happy with how the space has turned out, we’ve got a lovely big open workshop space, a cosy little office space for more intensive working and concentration and then a bright meeting room for when people need a private area or are holding small events. Everyone that has visited so far has been really impressed with our set-up and it’s regularly been compared to a Manhattan loft, we do have a great view onto the gorgeous 1880s built Stanley Building.

Since opening we’ve had various people coming through the doors, we had an open day on Friday 22nd which saw us get two write-ups in the Daily Post and this week we welcomed in another “permanent” desk user (as opposed to the various hot-deskers). Take a look at the website for more information about co-working, hot-desking and workshop space at DoES Liverpool though there’s a few more posts up on the website for the Maker Night regular making events in Liverpool. (The DoES website is actually brand new so you may not see it yet while the DNS propagates but it’ll be there soon)

Besides DoES I’ve actually been relatively quiet for client work. This has been useful for getting things done on DoES but I definitely need to start getting my hands dirty with code again. I’ve started looking at making some upgrades to my CamViewer iOS app and there’s a few more client apps that should be kicking off soon.

I’ll leave it there for the update but hopefully I’ll get my “year notes” up soon to give a retrospective of what I’ve done in the first 3 years of MKE Computing Ltd.

-

Catching Up, Week 152 (was 165)

Long time since an update but there’s some important things going on so I wanted to get up to date.

In the weeks after my last update I continued to work on the Colour Match app for Crown. I’ve added various features to try to help users find the right colour better including adding in a new set of colours that need to be mixed on-demand, and then a set of colour palettes to let you browse the colours similar to the one the app has selected for you. I submitted that app last Thursday and Apple approved it around Tuesday of this week so that’s now available from the app store.

I then went on holiday for two weeks! Apart from a really crap start to the holiday courtesy of US airways and a cancelled flight meaning we were 24 hours late into our destination we actually had a really good time. We started our holiday in San Francisco, eventually having 2 full days there. This was actually my fourth trip to San Francisco but my wife’s first so it was nice to be able to take her around and show her some of the sights I’ve previously enjoyed. The first day was spent within SF doing a bit of shopping, visiting Chinatown and then over to Fisherman’s Wharf to do a boat cruise under the Golden Gate bridge (a first for me actually). On the second day I hired a car (an hour spent in a queue, thanks National!) and we explored a wider area. We drove into the Presidio and I took a photo of my wife with the Yoda fountain at the Lucasfilms HQ. We then continued on to the Golden Gate bridge, we drove over and parked at the viewing spot on the other side to take a photo, we then drove back over and to Baker Beach for a few more photos. The weather wasn’t really beach weather, more gray and cool, but it was still nice to see and take a few photos.

After this we went onto the Golden Gate park. I was originally looking for the Japanese Tea Garden but we ended up getting lost and ending up at the rose garden. This was nice enough to walk around so we had a look and I took some photos. From here we headed south, I was aiming to take the coast road but unfortunately I relied on the GPS too much and it took us mostly on a boring highway to our next destination - Half Moon Bay. The weather still wasn’t really playing ball so we didn’t spend too much time here but drove a short distance north to visit Barbara’s Fish Trap. This is a great little fish restaurant that I found last year (with a WhereCamp friend John Barratt). We both had a mixed seafood selection consisting of a big selection of breaded seafood delights. In the evening we headed back into town to join the WWDC2011 European developer gathering. Unfortunately I hadn’t managed to get a ticket for WWDC (but can you imagine how annoyed I would have been missing the first day due to cancelled flights?!) so it was good to be at least a little involved. I enjoyed a nice micro-brew beer and a good chat with Dave Verwer of Shiny Development and the European Apple developer liaison David Currall.

After San Francisco we flew to Detroit to see friends. This was a great relaxed time, it was good to catch up with friends and meet their new 9 month old twins! We didn’t get up to much while we were there but we did go on a quick trip into Detroit to see Michigan Central Station and even drove over to pay a quick visit to Canada on the other side of the Detroit river. On our last day we made the two hour trip up to see our friend’s grandparents who live on the shore of Lake Superior. They were actually on the shore of Saginaw Bay, so not the main part of the lake, but it was still huge and an impressive sight.

After Detroit we carried on to New York City. We stayed near Times Square and did all the tourist things including visiting Liberty and taking in the view from the top of the Empire State Building. There’s been a few links dotted through this post but if you want to see all my photos take a look at my set of America photos over on flickr.

So, finally, week 165. Just before I went away we signed the paperwork to register a new Community Interest Company - DoES Liverpool. The idea behind DoES Liverpool is to create a space in which people can come together, can use a workshop to design and build products, can co-work on desks and ultimately can build businesses. Much of this week has been taken up with organising paperwork for the company and the bank account and finalising details of the venue. If you’re interested in hearing more about DoES Liverpool then be at GeekUp on Tuesday in Leaf on Bold Street from 6:30pm to hear all the details.

-

Launching Bubblino and Client Apps, Week 146 (was 159)

I spent most of this week updating the Crown “Colour Match” iPhone app that I’ve previously made a few small changes to (I had nothing to do with the original codebase on this app). There was a few false starts while I waited for the graphics for the app, there was a few issues getting them through in just the right formats for the low and high res iPhone screens, but once I’d got them I did manage to make some headway.

The other thing I did was to launch the new version of Bubblino & Friends. This new version offers some great new graphics, new animations and audio that has been created just for the app. I’m really happy with the app and have enjoyed using it to follow some hashtag searches myself but I have to say that sales have actually been quite low since it launched, lower than I’d like anyway. I think that it’s not clear to people why they need an iPhone app to help them use twitter’s search features. As I say I’ve enjoyed using it but, as ever, people are just never sure about putting down that 59p/99c for something they don’t know they need. Hashtags are in use so much though, from the Eurovision Song Contest Final today (#eurovision) to episodes of The Apprentice (#apprentice OR #theapprentice) people are hashtagging tweets all the time and I do hope this app will become a great way to consume these. I can think of a few things that could improve the experience including finding a way to see more of the tweet text (without, of course, hiding Bubblino away!) with one obvious thing being an iPad version. I’ll be listening carefully to any feedback I get and pushing updates out as soon as I can.

Finally, this week we held two of our Maker Night hacking evenings in the Art and Design Academy of Liverpool John Moores. With a 7-9pm session on Wednesday and a 6-10pm session on Friday we all made lots of progress on the various projects we’ve been working on. On Wednesday I got more involved in the Cupcake 3D printer we’ve been building so that I could spend Friday looking after it (as Ross Jones who has led the build so far couldn’t make it). Friday night’s event was actually part of a wider event going on in Liverpool called Light Night with events occurring all over the city. This was great as it meant we had lots of visitors coming down and finding out about what we were doing and looking at our mini-exhibition of hardware hacks (we had my location clock versions 1 & 2, Mycroft’s Radio, Bubblino and an Internet connected temperature guage). While it was great to tell the visitors about what we were up to it did delay my work on the 3D printer! We eventually got the final few bits done and everything wired up and managed to test the plastic extruder and the platform stepper motors that move everything around. While it was great to have some success I’m afraid the most we managed to “print” was things like this. Next month’s Maker Night should be great as finally the first real objects should be printed, just a shame I won’t be there to see them! If you’re interested in going, head over to the Maker Night website.

-

YAHMS: Base Station

Bit of a delay as I’ve been busy with other things but in this post I’ll be completing the set of YAHMS hardware by discussing the base station hardware and software. The base station has a few jobs to do in my YAHMS setup:

-

Physically connect to the relays via digital output pins.

-

Download the config for digital output pins and then control them.

-

Receive the XBee signals from the temperature probes.

-

Take samples from an on-board temperature sensor.

-

Submit samples received locally and via XBee to the server.

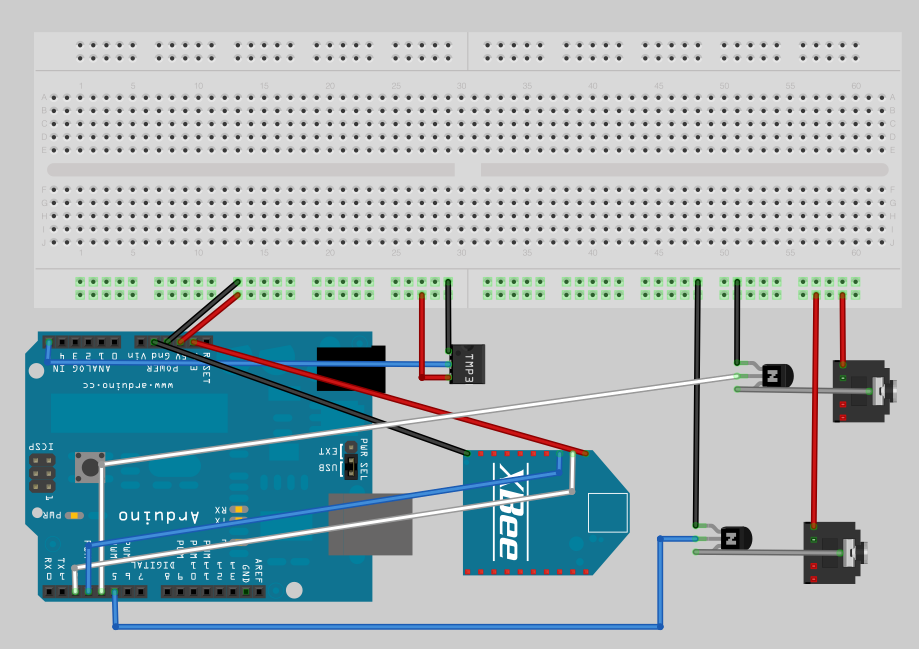

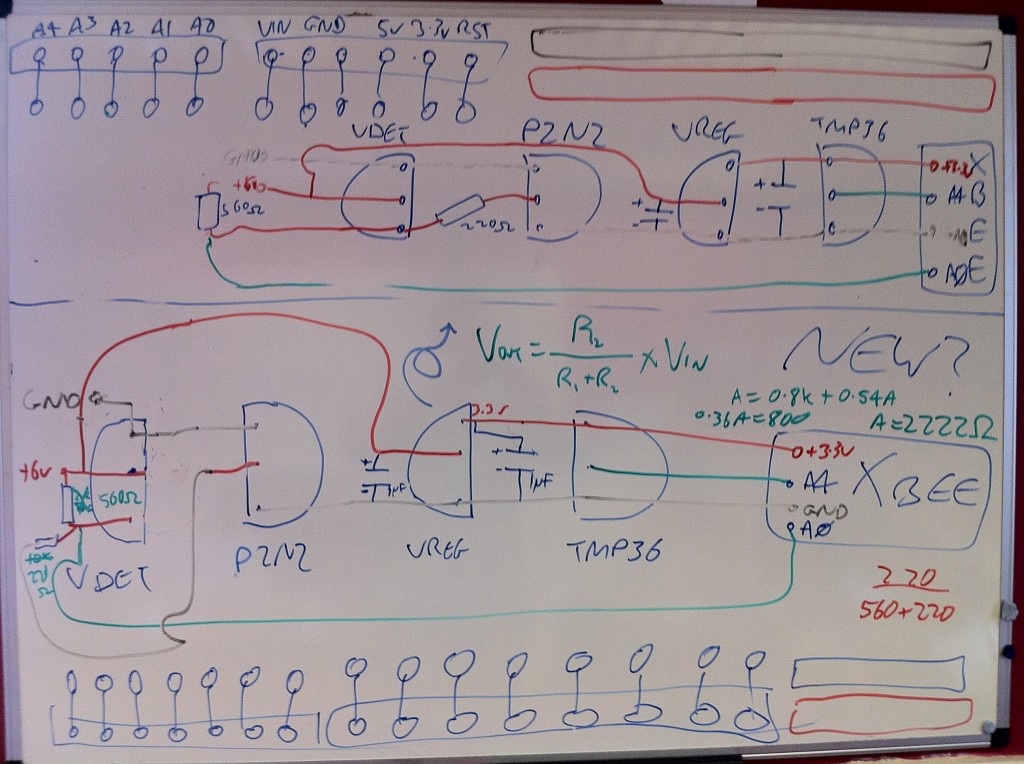

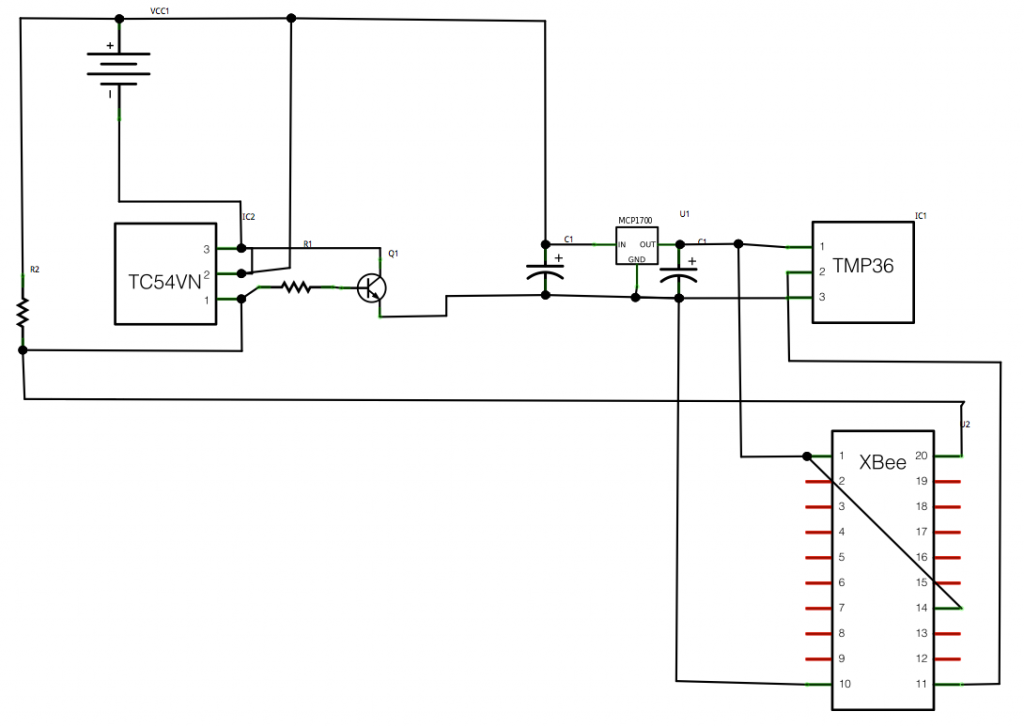

The circuit for this is fairly simple though, just connecting up some inputs and outputs. I mentioned connecting an XBee to an Arduino in the temperature probes post, we simply need to connect the DIN and DOUT pins up to the Arduino so that it can receive the information (and of course the power and ground wires). Because I like to use the main serial interface on the Arduino for outputting debug information back to the computer I’ve gone with connecting to pins 2 & 3 and using the NewSoftSerial which essentially means the serial interface will be provided by software. This isn’t ideal as in theory it means you’re more likely to miss data as it comes in, but the latest software serial drivers largely get around that issue by being interrupt driven. So I end up with the XBee power and ground going directly to one of the grounds on the Arduino board and the regulated 3.3V output, and then pin 2 (DOUT) on the Xbee is plugged into pin 2 on the Arduino and pin 3 (DIN) goes to pin 3 on the Arduino.

I have two NPN transistors turning on the relays (using transistors so that the magnet in a coil relay won’t cause a burst of current draw to the Arduino digital pin) which are plugged into two 3.5mm audio jacks. I have 5VDC power going onto the tip of the headphone jack, the sleeve connection of the jack then goes to the collector on the transistor and the emitter of the transistor completes the circuit by connecting to ground. The base of the two transistors go to pins 4 and 5 respectively on the Arduino.

I also decided to add a temperature sensor onto the board just because I had lots handy and to make sure that I had something to sample locally. The TMP36 is wired to the 5V power supply and then the VOUT goes to A5 on the Arduino to sample the temperature. See my temperature probe post for more details on TMP36s.

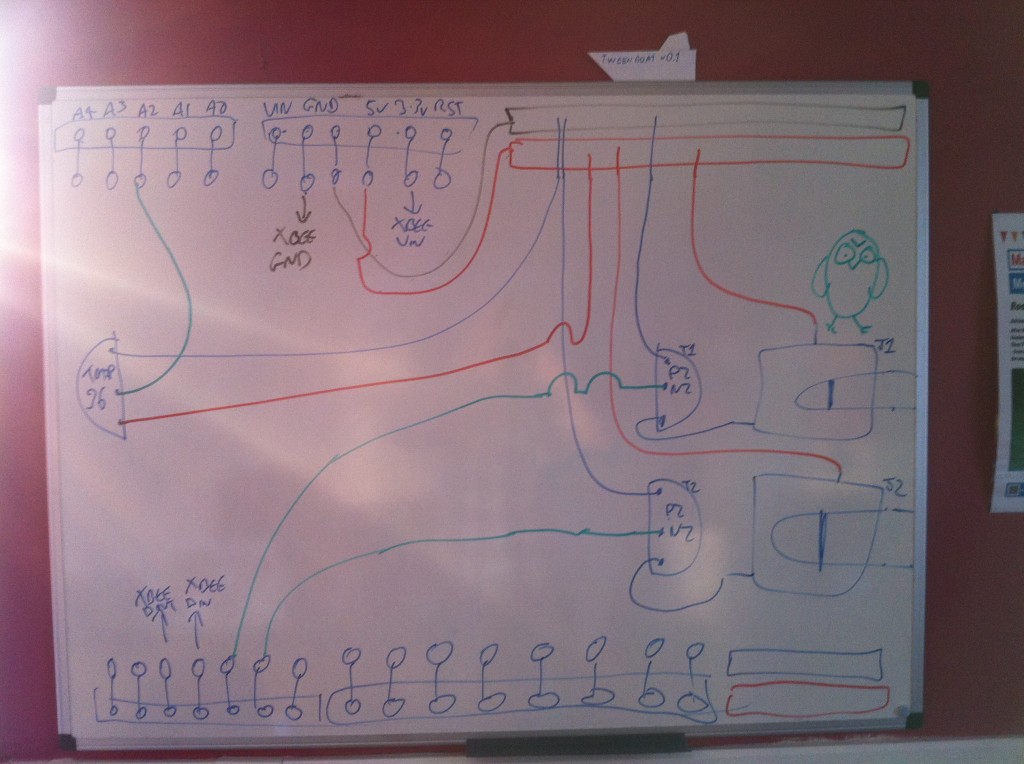

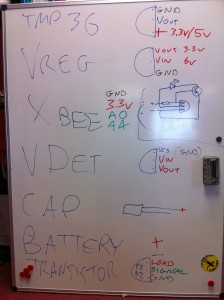

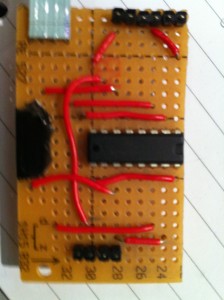

Here’s a picture of my original circuit diagram:

And here’s a fritzing version of that diagram:

And here’s a photo of the finished product:

The board I’ve used here is a prototyping shield that I got from @oomlout at MakerFaire. Being a shield it’s really handy and has two sets of connected track on the ends allowing power distribution. I couldn’t find a part for it in Fritzing so I’ve just used the breadboard piece and tried to arrange the parts in the diagram in a similar way to how they ended up, but with more useful spacing.

The board I’ve used here is a prototyping shield that I got from @oomlout at MakerFaire. Being a shield it’s really handy and has two sets of connected track on the ends allowing power distribution. I couldn’t find a part for it in Fritzing so I’ve just used the breadboard piece and tried to arrange the parts in the diagram in a similar way to how they ended up, but with more useful spacing.I had a few small physical problems with the board when it was completed. The pins on the headers on the prototyping shield are note long enough for it to properly stand above the RJ45 jack on an ethernet shield. You can kind-of push it down so that all the contacts are made but it’s not quite ideal. This also means that the RJ45 shielding will short circuit any connections it’s butting against on the prototyping board. That caused a bit of a debugging nightmare for me at first until I realised and applied some insulation tape! Things fit well if you put the ethernet shield on top of the prototyping shield but unfortunately the ethernet shield then didn’t work, I assume because it needs the ICSP connection.

The hardware here is pretty simple but I’ll do a parts list as usual for completeness. Thought I’d also include this cheatsheet which I drew on the second whiteboard in our office:

It’s worth noting that as YAHMS is completely configurable you can actually have whatever circuit you want using any of the digital output pins or Analog input pins, and choosing whether to use the XBee or not, my circuit is only really shown as a guide. In the future I intend to add support for digital inputs too.

Once that was all done it was time to write some software. As I mentioned in the first post I’ve actually open sourced the software for this so you can grab the source code for YAHMS from github and extend and fork it as much as you want. Unfortunately at the moment it has a few non-standard dependencies. The main thing is the new version of the Ethernet libraries that Adrian McEwen (@amcewen) has been working on. These will be part of an Arduino build in the near future but for now you can try getting them from his github fork of the Arduino environment. They’re really useful though as they provide DHCP and DNS support meaning no more fixed IP addresses! I’m also using a HttpClient library that Adrian has also written. This isn’t actually available properly from anywhere yet (but keep an eye on his github repositories!) so I’ve linked to a zip file of the version I’ve used below, that also contains a few other libraries that it uses. The final custom library is a version of this XBee Arduino library that I’ve hacked to support NewSoftSerial instead of just the standard Serial interface, see the links below for that too. You’ll also need NewSoftSerial of course and the Flash library which I’ve used to decrease memory usage. Follow the instructions in

patching_print.txtto patch the system Print library to support the Flash objects.If you manage to get through the rather complicated compilation process for YAHMS you’ll find that you just need to edit the MAC address in YAHMS_Local.h and you’re ready to go. In theory you should not have to configure anything else locally once I have yahms.net working fully. Once running on an Arduino that sketch will retrieve an IP address via DHCP, synchronise the time using NTP and will then attempt to retrieve the latest config for the MAC address from

yahms.netyahms.johnmckerrell.com (yahms.net does work but seems I’ve forgotten to update the source code). Currently there’s no way for you to put your config into yahms.net but hopefully I’ll get that up soon enough. Until that point you can edit YAHMS_SERVER in YAHMS_defines.h and use something on your own system.Config is requested by a HTTP GET request to a URL like the following: