-

Where 2.0: Modeling Crowd Behavior

Paul M. Torrens

Why would you model crowds?

Important factor of modern life. Some of the most important tipping points. Evacuation, emergencies. Understanding how crowds work is important for public health. New forms of mobs.

“We [really] do not know as much about crowds as we would like to know.”

“Simulation can serve as an artificial laboratory for experimentation in silico”

“I build complex systems of behaviourally-founded agents, endowed….

“What does this have to do with geospatial technology?”

“Business opportunities for Geographic Information Technologies”

Way to do it is convoluted, build models, AI, rendering, outputting analysis, statistics, GIS, etc.

Have technology to model individual people, often don’t have the data. Generate synthetic populations, downscaling larger data sources to individual level. Gives characteristics to our agents. Customer loyalty cards, GPS, cell phones.

Physical modelling and rendering

“Small-scale geography from motion capture and motion editing”

Record spatial and temporal information in studios, 100 frames per second, spatial accuracy in the order of a few cm. Graph of movement of skeleton through time.

Physical simulation, doing bad things to the modelled skeletons.

“Behaviour is simulated (!= scripted) using computable brains”

Using a Turing machine, socio-communicative emotional agent based model, wrapped in geographic information. Given GIS functionality. Geosimulation.

Taking basic model and wrapping in geography. Agents can “see”, can deploy mental map, plan paths, parse to waypoints, navigate to goals. Identify what they’re interested in, they can steer, locomote. Use motion capture data.

Should be able to drop them in a city and they’ll get going.

Data relies on GIS, video of a 3D model of a city. Space-time signatures, space-time patterns. Given someone’s usual geo behaviour can figure out all their possible directions.

Social network monitoring. Monitored children on a campus every day for three years, watched how they formed groups and how they play. High performance computing. Binary space partitions.

“Applying this to real world issues”

“Quotidian crowd dynamics”

Screen scenes. Pick one person and follow them.

Showing video, model of people. Old people, young people, drunk people. Each behaving autonomously. Positive feedback, negative feedback, all sorts.

“Extraordinary scenarios”

Building evacuations, bottlenecks.

Run through space-time GIS, look at egress behaviour, if they run more people get hurt.

Urban panic, out of buildings into urban environment, look at how they evacuate. Showing video people jogging.

More diagrams and graphs.

Dynamic density map.

Riotous crowds. Standard riot model and wrapping with geo-spatial exo-skeletons. Generates a riot. Can test for clustering to see if people with similar motives and emotions are grouping. Small scale riot behaviour turns to large scale very easily. Devious behaviour, rioters see police and pretend they’re not rioting, run away when chased. Inserting police who are told not to arrest people will calm the crowd but not completely.

Crowd response to invasion of non-native stuff. Showing Cloverfield.

Small scale epidemiology of influenza.

Zombies.

Putting crowds into digital environment, what happens to location based services.

Technorati tags: geosimulation, simulatation, modelling, crowds, where, where2.0, where2008

-

Where 2.0: Best Practices for Location-based Services

Sam Altman

Last year concentrated on our social network mobile applications. Can share your location, get alerts when you’re near people, geotag flickr photos, etc.

Idea is to use location.

As we developed service we discovered why live location apps suck so far.

Talking today about what we’ve observed is the problem and how we’re solving it.

When we hear about people doing well with location tracking we cheer each other. Loads of devices and apps coming out for location.

Location Challenges Today: What we want

- Inexpensive

- Low friction access

- Relevant accuracy

- Strong privacy

- Availability and Reliability

Structural Challenges

- Cost

- Availability

- Privacy

Doing 300 pings a day of user’s location, costs a few cents per go, we need to reduce this 100 times.
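To put rough numbers on that, here is a back-of-the-envelope sketch. Only the 300 pings a day and the 100 times reduction come from the talk; the 3 cents per ping and the 30 day month are my own assumptions standing in for "a few cents".

```python
# Back-of-the-envelope cost of polling one user's location.
# ASSUMPTION: "a few cents" is taken as 3 cents per ping (illustrative only).
PINGS_PER_DAY = 300        # from the talk
CENTS_PER_PING = 3         # hypothetical figure
REDUCTION_FACTOR = 100     # from the talk
DAYS_PER_MONTH = 30        # simplification


def monthly_cost_dollars(pings_per_day, cents_per_ping, days=DAYS_PER_MONTH):
    """Return the monthly cost in dollars of polling one user's location."""
    return pings_per_day * cents_per_ping * days / 100


current = monthly_cost_dollars(PINGS_PER_DAY, CENTS_PER_PING)
target = current / REDUCTION_FACTOR

print(f"current: ${current:.2f} per user per month")  # current: $270.00 per user per month
print(f"target:  ${target:.2f} per user per month")   # target:  $2.70 per user per month
```

Under those assumptions the per-user bill is in the hundreds of dollars a month, which makes it clear why a per-user per-month pricing model is the goal.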

Loopt Core Location Platform

- Mobile Client

- Location Privacy Manager

- Location Server

- Location Access Manager

Mobile Client

Need a client device to let you do location in any number of ways. Can use SMS, GPRS various ways to request your location. Clients only around for certain classes of devices. Runs at lowest level.

Privacy Manager

Consider privacy a lot. Do a lot to ensure your location doesn’t go to everyone. Ways to manage this: Global on/off, app by app, restrict by time, day, location. Set/obscure location. Can set on mobile or by web, for accounts, sub-accounts, many options.
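A minimal sketch of how checks like these might be combined, assuming a simple per-user settings object. The class, field and function names are all hypothetical, not Loopt's API, and the location-based and "obscure location" options are left out.

```python
from dataclasses import dataclass, field
from datetime import datetime, time


@dataclass
class PrivacySettings:
    """Hypothetical per-user settings; names are illustrative only."""
    sharing_enabled: bool = True                                      # global on/off
    blocked_apps: set = field(default_factory=set)                    # app-by-app control
    allowed_days: set = field(default_factory=lambda: set(range(7)))  # 0 = Monday
    allowed_from: time = time(0, 0)                                   # restrict by time
    allowed_until: time = time(23, 59, 59)


def may_share(settings, app_id, when):
    """Return True if `app_id` may see the user's location at `when`."""
    if not settings.sharing_enabled:
        return False
    if app_id in settings.blocked_apps:
        return False
    if when.weekday() not in settings.allowed_days:
        return False
    return settings.allowed_from <= when.time() <= settings.allowed_until


# Example: share with everything except an "ads" app, weekdays 9am to 6pm only.
settings = PrivacySettings(
    blocked_apps={"ads"},
    allowed_days={0, 1, 2, 3, 4},
    allowed_from=time(9, 0),
    allowed_until=time(18, 0),
)
print(may_share(settings, "friends", datetime(2008, 5, 13, 12, 0)))
```

Every rule has to pass before a location is released, so the default answer for anything unexpected is "no".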

Mobile Location Platform

Trying to get to per-user per-month models, getting as close to free as possible. Ads.

Access Manager

Current Location APIs are very bad. Trying to replicate the quality of Apple’s API. Only specify when the phone leaves an area. Only access location using a web app, or a wap app, choices for building apps, can access in number of ways.
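I read "only specify when the phone leaves an area" as a geofence exit notification: the app is told when the device crosses out of a region rather than polling constantly. A rough sketch of that idea, assuming a circular fence and a stream of (lat, lon) fixes; the function names and coordinates are mine, not anything from Loopt's API.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two lat/lon points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def exit_events(fixes, centre, radius_m):
    """Yield each fix at which the device moves from inside to outside the fence."""
    inside = None  # unknown until the first fix arrives
    for lat, lon in fixes:
        now_inside = haversine_m(lat, lon, *centre) <= radius_m
        if inside and not now_inside:
            yield (lat, lon)
        inside = now_inside


# Hypothetical fixes around a 500 m fence in San Francisco.
centre = (37.7749, -122.4194)
fixes = [
    (37.7749, -122.4194),  # inside
    (37.7755, -122.4194),  # still inside
    (37.7849, -122.4194),  # left the area
    (37.7949, -122.4194),  # still outside, no new event
    (37.7750, -122.4194),  # back inside
    (37.7749, -122.4094),  # left again
]
print(list(exit_events(fixes, centre, 500)))
```

Only the transitions produce events, which is what keeps the number of location lookups (and so the cost) down.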

Believe Loopt have the best location platform the world has ever seen.

Solving the problems with:

- Structural Challenges: per user per month model

- Availability: open APIs, easy to access

- Privacy: end user control and management, device and 3rd party app integration

Technorati tags: loopt, tracking, privacy, where, where2.0, where2008

-

Where 2.0 - Live Blogging

As you’ve probably noticed I’ve decided to have a go at live blogging the talks at Where 2.0. These notes are taken live so there’ll be lots of spelling mistakes and possible errors. I’ll try to go back and clean them up later but hopefully they’ll be useful for people who can’t be there.

Break now, I really need one!

-

Where 2.0: The State of the Geoweb

John Hanke

Fourth Where 2.0 I’ve been to.

At their best mapping and geography make the world seem like a slightly smaller place. Opportunity for all of us… we should keep that in mind.

The idea that is shared by a lot of people that geography is a really useful lens through which to look at data about the world, that geography and maps are a really useful way to organize information we’re looking at. That mapping data provides context for the information once we find it.

We’ve been talking about things like the idea of a geoweb, that would allow us to search and interact with this level of geographic data.

Would like to talk about progress, collectively, that we’ve made. Also talk about some of the challenges.

May 2007 - Showing a picture of geographic information that was available on the web a year ago to now, showing over 300% growth in the places and annotations that they’re aware of. Much more densely covered. Not just where you’d expect, but also into the long tail of the developing world.

More and more rich media, millions and millions of geocoded photos. Panoramio, Flickr, youtube, 8 hours of video every minute uploaded, can be geocoded.

Almost drowning in a sea of geodata, though that’s a good thing. A while ago turned on geo search in Google. KML files. Incorporated into google maps. Can search across user generated and geocontent in addition to yellow pages. Made it much broader and globally relevant. Recently added to google maps mobile. Can render KML on phone(!) Much richer portal to the world around us.

Product announcement

Geo Search API… Launching Today!

Search box on google api will now search this geo data on the web too.

Mentioning “donating” KML to the OGC. Happy to say that as of a month(ish) ago it was officially voted in as a standard. Now owned by the community, managed by OGC.

Giving props to MS for supporting KML.

Kind of a dark web of geodata - the world of GIS. Thousands of servers full of geodata that we don’t have easy access to.

Stamen did a mashup of Oakland crime data but it had to be removed as they screen-scraped the data.

Reached out to ESRI - “leader in GIS” - over a million seats installed in their software, 50,000 servers, 250,000 clients use their data.

Welcoming on stage Jack Dangermond founder ESRI.

Slide on context showing geospatial applications growing, going from research communities through GIS professionals and enterprise users to consumers/citizens.

Hundreds of thousands of organisations, billions of dollars of investment and content management, can be, should be leveraged into this geoweb environment. Haven’t seen the potential realised to leverage this power. The geoweb is evolving, going to make a big jump, ESRI are engineering the 9.3 version of their software to plug in and become a support mechanism to the geoweb, providing:

Open meta-directory services, meta-data can be pulled off and integrated into consumer mapping environments, opens up to JS and flex APIs. Users can plug in GIS services directly.

Every piece of data on that server is going to be exposed on a HTML page that can be found by Google.

[Live demos]

City of Portland, searching for the Portland map server. Can pop up a metadata page which has been scraped off of the metadata service in the server. Can zoom in and look at the HTML page that has been scraped with lots of layer information available. Zooming in finds neighbourhoods, parcel data, sewer and water data. Whatever an organisation chooses to serve. Distributed services in the form of models and analysis. Can calculate on distributed server the drive times from an address and retrieve the polygon in KML. Can merge them with demographics, generate a demographic report. Can search and discover not just content but can reach in and get the live data. Important note, this is all coming out as KML.
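For anyone who hasn't looked inside a KML file, this is roughly what a polygon like that drive-time area looks like when built by hand. It is a sketch only: the function name and the coordinates (three made-up points near Portland) are my own, and nothing here is ESRI's output.

```python
import xml.etree.ElementTree as ET

KML_NS = "http://www.opengis.net/kml/2.2"  # namespace of the OGC KML 2.2 standard


def polygon_kml(name, ring):
    """Return a KML document string containing one polygon Placemark.

    `ring` is a list of (lon, lat) pairs; KML writes coordinates as lon,lat.
    """
    ring = list(ring)
    if ring[0] != ring[-1]:
        ring.append(ring[0])  # the outer ring must end where it starts

    ET.register_namespace("", KML_NS)
    kml = ET.Element(f"{{{KML_NS}}}kml")
    placemark = ET.SubElement(kml, f"{{{KML_NS}}}Placemark")
    ET.SubElement(placemark, f"{{{KML_NS}}}name").text = name
    polygon = ET.SubElement(placemark, f"{{{KML_NS}}}Polygon")
    outer = ET.SubElement(polygon, f"{{{KML_NS}}}outerBoundaryIs")
    linear_ring = ET.SubElement(outer, f"{{{KML_NS}}}LinearRing")
    coordinates = ET.SubElement(linear_ring, f"{{{KML_NS}}}coordinates")
    coordinates.text = " ".join(f"{lon},{lat}" for lon, lat in ring)
    return ET.tostring(kml, encoding="unicode")


# Hypothetical triangle standing in for a drive-time polygon.
doc = polygon_kml("10 minute drive time", [(-122.70, 45.50), (-122.60, 45.50), (-122.65, 45.58)])
print(doc)
```

Because the result is plain XML, the same file can be opened in Google Earth or any other client that reads KML.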

Second demo showing “heatmap” of climate change. Next hundred years, being animated (slowly) off the server. Mid-west getting warmer. 5-10 degrees increase. Mashup with JavaScript mashing up analytic server with google maps.

Great that you can get information on a single site, but having it accessible on the geoweb allows it to be blended into a sea of mashups. These kinds of data can get out to a bigger audience. Big step forward. Integrating the geographic science with a collaborative environment. Should change our behaviour, if we’re fortunate. Requires collaboration from everyone in this room. Greenmaps.

Fires in southern california, my map created by UGC. Data being pulled into google earth, mashed up with aerial imagery of the smoke/fires. Showing burn/fire line, model of where the fire will go, all displayed in google earth. Knowing where the fire was going was missing, but now possible? Mashing up consumer geo stuff with commercial GIS is great. Can find the evacuation routes, road closures, all being generated in real time. Allows the data to be served out in these open formats.

This is all part of 9.3 which is all launching in a few weeks.

Google very excited about this with ESRI pushing to make this happen. Huge opportunities. Further expansion of the geoweb.

Questions…

… how quickly do we/ESRI expect people to take up the software?

Not sure, but adoption rate on new releases is months to 6 months. Software adoption isn’t the only issue, also “do I export my data?” Some agencies will be very interested in providing this public service of their knowledge, others won’t be. As this evolves, there’ll be the role for outsourcing public data by private companies. Two decisions, technology adoption and “do I release the data”? It’s now “one click” for people who do want to give their data away.

-

Where 2.0: From Data Chaos to Actionable Intelligence

Sean Gorman

[First slide was missing]

Three trends: Geoweb, handling large datasets, emerging semantic web

Story began as: Trying to GeoHack Without getting arrested

Sean started as a geographer but didn’t want to be a geographer really, looking at conceptualizing the internet, what the router graph looked like, etc. etc.

Big Data Sets -> Algorithmic Analysis -> Map Logical Results to physical realities

Made for some pretty interesting maps - “How to take down NYSE?”

Men in black suits turn up.

In-Q-Tel came to the rescue, big history of taking ideas to market from the academic and start-up culture. Specifically in the geo-web. Have taken others, MetaCarta, Keyhole, @Last Software.

This is the tip of the iceberg of the role that government has played in taking technologies to market before there’s a big market for them, like GPS and satellite imagery.

Geography on the web became mainstream as we’d been playing around with geography of the web.

We’d always been focused on really large datasets. Stuff coming out was based on small

Geocommons - “Crowdsourcing large structured data sets with quantitative capabilities”

“Didn’t we try this last year”

“What happens when your database reaches 1,683,185,246 features”

Database goes a bit kaput.

Why so fast? Mainly it’s been on the long tail, we’ve been working on the short tail. 95% of the data

Data Normalization -> Tables get big -> Optimization -> Data Ingress -> Repeat

Everything helped but nothing really solved the problem.

Continue to fight

or

Build a lightweight object database

Can fit well over 1 billion features into ~16 Gigs of storage

Launching “Finder!”

Can search and can add your own data.

Link GIS and the real world.

[Demo]

Describe data, tag it, URLs, metadata URL for proper GIS marker, contact information…

Data was mined as it was uploaded. Can see statistics based on the data after uploading.

Can pull data out into KML, GML. Can view in Google Earth, MS Virtual Earth.

Links to metadata, FGDC[?], ISO standard, microformats.

Showing a KML file in virtual earth.

Census demographic information can be overlaid with sales information entered as part of the demo.

Can download the demographic information, pull it into google earth, can also pull it into GIS workflow.

[Demo showing polygon areas highlighted in colours on google earth with masses of information in an info window]

Expanding to: Maker! - ? Atlas! - share maps around stories and collaborate

Talking to other silos of data about trying to federate our data and interconnect it. Where the cloud and the data can be owned by everyone.

- Bringing a new class of content/data to the web

- Intelligently….

- Enable the content to answer meaningful questions for users

Technorati tags: geocommons, where, where2.0, where2008

-

Where 2.0: Merging Roadways: PC and Mobile Maps Coming Together

Michael Howard - Nokia

Nokia maps 2.0, mapping and routing in > 170 countries. “To be installed on 40 to 60 million gps handsets and close to 100 million location-aware devices.”

Commercial transactions in >100 countries per day.

Walking, pedestrian routing. Some phones have compass built in to tell which way you’re looking.

What’s next?

Innovation on “Drive, move, discover, create & collect, share, meet”

Mobile, guidance centric. People get wherever they want to go regardless of transportation mode.

How they can do this by combining service across the web and mobile..

Video, music, woo!

Get rid of the idea of “mobile internet” and “internet”. There is one internet. This is a service that is complementing the mobile experience, not replicating it. On the phone, collect cool POIs, go back to the web and [upload?] them.

Sportstracker

-

Where 2.0: EveryBlock: A News Feed for your Block

Adrian Holovaty

Everyblock - Five EveryBlock lessons

“When I was your age we had to reverse engineer Google’s obfuscated JavaScript just to get maps on our pages!”

Mentioning Chicago crime maps, how we had to do things before the API was available.

Coolest and most useful part was every block in Chicago had its own page, listing all the crimes on the block. This made me think we should be able to do the same thing but bring in all sorts of other stuff that’s related, literally just for that block.

2 year grant from the Knight Foundation.

Chicago and NYC, expanding..

Can see every crime, business licenses, building permits, all this stuff is news to you if you live on the block.

Zoning changes, if a restaurant wants to build a bar, that’s very relevant to you. Filming locations. We also geocode news stories. Reviews, flickr photos, all sorts.

1st Lesson - “What is refreshing about Everyblock is that they’re feeding me existing data in an interesting way instead of asking me to give them my data”

Taking advantage of existing data, rather than the usual “give us your data” stuff.

“Be nice and appeal to civil servants”

Don’t bash people even if you’re improving their crappy site.

“Governments should focus on services [not on data]”

They shouldn’t have to concentrate on the cool site for viewing the data, they should just make sure they can provide the service for you to enter data.

“Plot cities/agencies against each other”

If one city is giving you good data, tell other cities.

2nd Lesson “The more local you get, the more effort it takes”

Slide shows “Convenient centralized USA building permits database” - on a clipart PC screen. Unfortunately there is no nice central database.

Lesson 3 - “Embrace hypertext”

Showing a blurred crime mashup, can restrict to show just certain crimes, certain dates. Not “webby” because you can’t link to results.

“Will my site work without maps?” - Chicago crime did because it had good text.

“Permalinkability”
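One way to make a filtered view linkable is to keep the whole filter state in the URL's query string. A small sketch of that, assuming hypothetical filter names and a placeholder example.com address; this isn't EveryBlock's code.

```python
from urllib.parse import parse_qs, urlencode, urlsplit

BASE_URL = "https://example.com/crimes/"  # placeholder address


def permalink(filters):
    """Encode the current filters in the query string so the view can be linked to."""
    # Sorting keeps the URL stable however the filters were chosen.
    return BASE_URL + "?" + urlencode(sorted(filters.items()))


def filters_from_url(url):
    """Recover the filters from a permalink."""
    query = parse_qs(urlsplit(url).query)
    return {key: values[0] for key, values in query.items()}


filters = {"type": "burglary", "from": "2008-05-01", "to": "2008-05-12"}
url = permalink(filters)
print(url)  # https://example.com/crimes/?from=2008-05-01&to=2008-05-12&type=burglary
```

Anyone who opens that URL gets the same crimes for the same dates, with or without the map.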

Lesson 4 - “Move beyond points”

Not every story has to do with a point, some have to do with a neighbourhood. Don’t do a crappy job by choosing a centerpoint. Showing highlighting areas using polygons, or a part of a road using a line.

Can restrict zoom levels if necessary

Lesson 5 - “Roll your own maps”

Look at what Google give you for instance, they put building outlines, subway stops, one way arrows. Do you specifically need that for your mashup? You have no control with google maps over the colour of water, the colour of streets, the size of streets, language, etc. etc.

“One size fits all”

Would you seriously go to your web designer and say “yeah for our corporate website, use some standard wordpress template”

At EveryBlock we use Mapnik to generate tiles, allowing us to use our own styles, our own colour of blue. We use TileCache, OpenLayers and Django.

No building outlines, no one way streets, basic style matches everyblock’s design.

‘Search for “take control of your maps”’ to see an article by Paul Smith from EveryBlock about how to do this.

Questions

Q: How are you going about managing relevance for users, allowing them to specify what they’re interested in?

A: They can say what schemas they’re interested in, to select don’t show me restaurants, only show me crimes. Can’t go further into schemas but we’re looking to add it.

Q: …. question concerned about privacy issues, a crime in a house

A: We only geocode to block level to partially help that. Also the cops have a limit on what they give out in some cases, for super sensitive stuff they don’t provide it. We mention this on the site. We don’t provide any names, e.g. for buyer/seller information on properties.
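As an illustration of what block-level precision means, here is a sketch that rounds a street number down to its hundred block. It assumes US-style addresses where a block is a run of 100 house numbers; the function is hypothetical and not how EveryBlock actually does it.

```python
import re

# A street number followed by the rest of the street name.
ADDRESS_RE = re.compile(r"^\s*(\d+)\s+(.+?)\s*$")


def to_block(address):
    """Reduce '1234 N Main St' to '1200 block of N Main St'.

    Returns None when the address cannot be parsed, so that an exact
    address is never passed through by accident.
    """
    match = ADDRESS_RE.match(address)
    if match is None:
        return None
    number, street = match.groups()
    block = int(number) // 100 * 100
    return f"{block} block of {street}"


print(to_block("1234 N Main St"))  # 1200 block of N Main St
```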

Q: … talk about the experience of parsing locations out of text

A: Kind of a big kludge, thinking that we solve things by throwing different methods at it rather than it being perfect natural language processing. Every time we find a new way of specifying an address that we haven’t handled before, we add it.
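That "keep adding patterns" approach might look something like the sketch below: a list of regular expressions tried in turn, with a new entry added whenever an unhandled address style turns up. The patterns and names here are my own guesses for illustration and are far cruder than anything a real site would need.

```python
import re

# Street names: optional compass prefix, capitalised words, then a suffix.
SUFFIX = r"(?:Street|St|Avenue|Ave|Boulevard|Blvd|Road|Rd|Drive|Dr)"
STREET = rf"(?:[NSEW]\.?\s+)?(?:[A-Z][a-z]+\s+)+{SUFFIX}\b"

# Each entry handles one way of writing a location; add more as they are found.
PATTERNS = [
    ("block", re.compile(rf"\b\d{{1,5}}\s+block\s+of\s+{STREET}")),
    ("intersection", re.compile(rf"\b{STREET}\s+(?:and|at|&)\s+{STREET}")),
    ("address", re.compile(rf"\b\d{{1,5}}\s+{STREET}")),
]


def find_locations(text):
    """Return (kind, matched text) for every location pattern found in `text`."""
    found = []
    for kind, pattern in PATTERNS:
        for match in pattern.finditer(text):
            found.append((kind, match.group(0)))
    return found


print(find_locations("Police were called to the 1200 block of N Main St on Monday."))
print(find_locations("The crash happened at State St and Lake St."))
```

It is nowhere near natural language processing, which is the point of the answer: each pattern is dumb on its own and coverage grows one case at a time.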

Q: Can you give us an idea of why mapnik for instance, rather than mapserver?

A: Would have to defer to Paul Smith, guessing it was about python bindings. Font rendering too.

Q: How many cities and how do you make money?

A: Funded by grants for 2 years, don’t know after that. Grant, VC, magically dream up business model? All the code will be open source. Not an incorporated company, just a bunch of guys in Chicago. Not telling how many cities.

Q: Is there enough awareness of the project that cities are coming to you offering to help?

A: Yes in some cases, news organisations are asking how to format articles, bloggers too

Q: How do you get boundaries?

A: We get it from the government. Easier in the US, can be harder in other places, costs a lot in Germany for instance.

Technorati tags: everyblock, where, where2.0, where2008

-

Watch my Journey to Where 2.0

I’ve managed to knock something up that will let you track my journey from Liverpool in the UK over to San Francisco in the states. I do know I won’t be traveling the furthest but I still thought it would be fun ;-).

I’m currently (Friday lunchtime) sat in my house in Liverpool. I went for a run this morning so there is a little data in there already. I’ll be traveling down to London tonight and then going over to Heathrow and flying to San Francisco around lunchtime tomorrow. I’ll try to log my location as often as possible using the various means at my disposal. I have quite a few ways to do it so I’m hoping I’ll manage quite a good trace. I doubt I’ll get much if anything while I’m in the plane but I’ll give it a good go! Also I’ll be updating my position while I’m in California so it should end up with a full log of my trip over there. Finally, the page will automatically update itself every 5 minutes to see if my location has changed.

http://johnmckerrell.com/map/?show=john

Note the times at the bottom, “Last checked” is the last time that the page checked for an update and “last updated” is the time that I last sent it my position. So take a look and let me know any comments you have, especially if you find a bug! I may enhance it if I get time so you might want to refresh it as well from time to time.

One other thing to mention, even when I’m accurately updating my location with my GPS I’ll still only be updating once every minute, also that page isn’t capable of updating more than once every five minutes. I’m caching the location rather than using a database lookup to make it run a bit speedier. Oh yes, I do know the arrows don’t work in IE, unfortunately VML is not capable of drawing them.

Technorati tags: javascript, where, where2.0, where2008, map, tracking, wherecamp

-

Three month update

Wow, before starting this blog post I thought I’d check the date on my previous post. I haven’t written anything in nearly three months! That’s pretty terrible so I’ll try to do a few more posts sometime. I’ve been pretty busy with a number of other things recently but that’s no excuse, I know interesting things have happened that I should have found the time to mention.

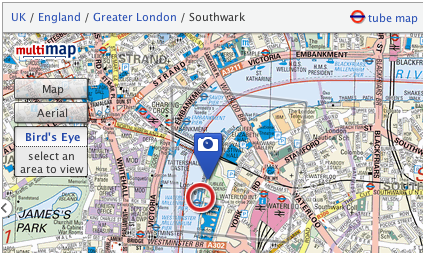

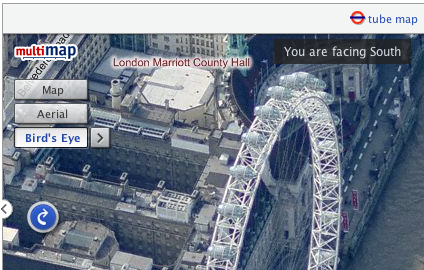

I guess the biggest of the things that have been restricting my time recently was the recent redesign of the Multimap.com site and the availability of Microsoft Virtual Earth content within the Multimap API. Oddly enough, this was the third time that I’d worked on a project to pull VE content into our API. The first two times we had done it by wrapping VE’s API into our own, passing function calls to our API onto VE’s where necessary in a similar fashion to OpenLayers Base Layers. Though this gave us access to VE content and allowed us to resell the content to the UK Yell.com site, it was never going to be the best method. The finished product required loading the entirety of both APIs (nearly 300KB just for JS), and because we only had access to exposed functionality resulted in some odd behaviour, such as when our API and VE’s were both trying to smoothly pan a map to a new location.

In December of course we were bought out by Microsoft and as a result were able to get direct access to their imagery. Making use of VE’s maps and aerial data was fairly simple but including the Bird’s eye imagery was a little more difficult. Fortunately with the help of a few guys at MS who sent through some source code, and with the existing good “custom map type” functionality in the Multimap API, I had a good working model pretty soon. We then spent a long time trying to come up with a really good way of communicating “Bird’s Eye” mode to users. Though we had trials and tribulations along the way I think we did pretty well with the solution we came up with, it’s live on multimap.com if you want to take a look (or see the screenshots above and below).

Another thing that I’ve been trying to get sorted out is the server that hosts my blog. I’ve been using the same dedicated server for a good 4-5 years now. That server was hosted at Sago Networks and it’s been through a lot, including Florida hurricanes, but has recently started hanging on a regular basis. A replacement NIC a few weeks ago gave promise of a reprieve but it has again crashed since then. Though I’ve had really good service from Sago who have been happy to manually fsck it every time it went down recently, I decided I had to go with price and have now switched to Hetzner. Their prices were too good to miss, and though the fact that I don’t speak a word of German has caused a few issues along the way everything seems to be going well with it now.

I’ve even decided to be all modern and am hosting my site on a VMWare Server virtual machine. I’m hoping that this will allow me more control over that machine, making it safer to upgrade and reboot as I’ll always be able to get access to the console through VMWare. Hopefully it’ll also make things much easier when I eventually decide to move to a new server again (I’ve had this new server for a month now and have only just found the time over the long weekend to move everything across!) I’m also hoping it will lend me a little more security by allowing me to segregate important sites that I need to keep secure away from older less reliable code.

One other thing to mention, I’m going to San Francisco next week! Where 2.0 is the second most important conference for location based services providers (the most important, of course, being OSM’s State of the Map) but in past years I haven’t been able to attend. Fortunately this year some budget has turned up and I together with four of my colleagues will be there. Though the main event is the O’Reilly conference, I’ll also be going along to WhereCamp 2008 the following weekend, and as many other events as I can cram in on the Thursday and Friday between. If you’re going and I don’t already know it then get in touch with me on twitter or friend me on the WhereCamp site.

I’m also intending to map my journey there as much as possible, I have to get from Liverpool to London on Friday night, over to Heathrow on Saturday morning and then fly to San Francisco International airport at lunchtime (leaving 10am, arriving 1pm, still freaks me out ;-). Obviously it’s highly unlikely that I’ll be able to log anything while I’m flying but I’ll do my best, and I shouldn’t have too much problem on the train down. Check back later this week when I’ve figured out how I’m going to share my location with you :-)

-

Apple invents iPod maps - podmaps?

The other day, a friend sent through a link to an interesting “new” technology that Apple have applied for a patent for - podmaps. Reading through the article it seemed oddly familiar, perhaps because I came up with the same idea two years ago. As most good ideas do, this one came about over a few beers when someone suggested that Multimap should do a podcast. Of course the idea of a Multimap podcast was perhaps a little odd, but it did get me thinking about what we could do with podcasts.

After a little investigation and playing around with Apple’s “ChapterTool” I knocked up the Multimap Travel Directions Podcast. You should be able to try it by downloading the file and launching it in either iTunes or Quicktime. It takes advantage of all the great features available to podcast files. The file is split into “chapters” with each chapter being a step of the route.

Each chapter has audio with a computer voice talking you through the directions for that step, an image attached to it which shows a map of that step, the text is included as the “title” (and is readable on your iPod’s screen) and there’s even a link to the route on multimap.com for when you’re viewing the podcast on your computer. (Note that the podcast above was made 2 years ago so uses old maps and probably links to the old multimap.com, keep reading to hear about the improvements though…)

So this is all very nice, but this thing took me a few hours to make by hand, surely there’s a better way? Well with all the great APIs that Multimap provides, yes there is. Though I was busy with a few other things I played around with this idea over the following 15 months or so. Every few months I’d write a few lines of code, come across a problem, get bored and put it down again. Finally though I got past all the problems (how to tell the length, in seconds, of an audio file, how to concatenate audio snippets, how to convert to AAC, and a few more) and managed to knock up a ruby script that could take a source and a destination and give you a podcast containing the directions. That was 9 months ago though and I’ve been sitting on it since. Seeing the news on Apple’s patent application has spurred me on to releasing it.
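To give a flavour of the audio-length and concatenation problems, here is a small sketch in Python rather than the Ruby, SoX and Audio::Wav combination the real script uses. It assumes uncompressed WAV snippets that all share the same format, and the function names are made up for this example.

```python
import wave


def wav_duration_seconds(path):
    """Length of a WAV file in seconds: frame count divided by frame rate."""
    with wave.open(path, "rb") as wav:
        return wav.getnframes() / wav.getframerate()


def concatenate_wavs(paths, out_path):
    """Join WAV snippets end to end (assumes they all share one format)."""
    with wave.open(out_path, "wb") as out:
        for index, path in enumerate(paths):
            with wave.open(path, "rb") as src:
                if index == 0:
                    out.setparams(src.getparams())  # copy format from the first snippet
                out.writeframes(src.readframes(src.getnframes()))


def chapter_starts(durations):
    """Start time of each chapter, given the length of each step's audio."""
    starts, elapsed = [], 0.0
    for duration in durations:
        starts.append(elapsed)
        elapsed += duration
    return starts


# Three route steps lasting 2, 3.5 and 1 seconds.
print(chapter_starts([2.0, 3.5, 1.0]))  # [0.0, 2.0, 5.5]
```

The chapter start times are just the running total of the snippet lengths, which is why knowing each file's length in seconds matters before the chapters can be written.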

I spent a few hours last night updating the script so that it now works with our recently launched Static Maps API meaning that you’ll see a vast improvement in the map quality. The script requires OS X to work because it uses Apple’s ChapterTool and “say” command. It also needs SoX (for various sound conversions), the Perl CPAN module Audio::Wav and the FAAC library. I’ve packaged the script into a zipfile and put a README in there with some information on using it, I mention how to get and install the 3rd party packages in there too.

There are obviously lots of improvements that could be made to the podcast, and I’m sure Apple will make many if they actually do release “podmaps”. All of the podcasts I’ve generated here have been made on my OS X Tiger installation, if you have Leopard you will be able to use the new speech synthesis voice that came with it to make better sounding podcasts. All the routes generated are currently driving directions but it would be a simple tweak to make walking directions. Extra functionality could also be added using more Multimap API functionality, such as highlighting places to eat as you’re passing them and things like that. For now I just thought I’d release it as-is and see what people think.

So, please download the script, play around with it, and let me know in the comments what you think of it. It might be fiddly to get it working but rather than get too technical in this post I thought I’d put that sort of thing into the README files. If you haven’t already, you’ll need to sign up for the Multimap Open API to get a key to use. Here’s a few more routes to give you an idea of what it can do too:

Technorati tags: apple, ipod, podmap, routes, map, multimap, maps
